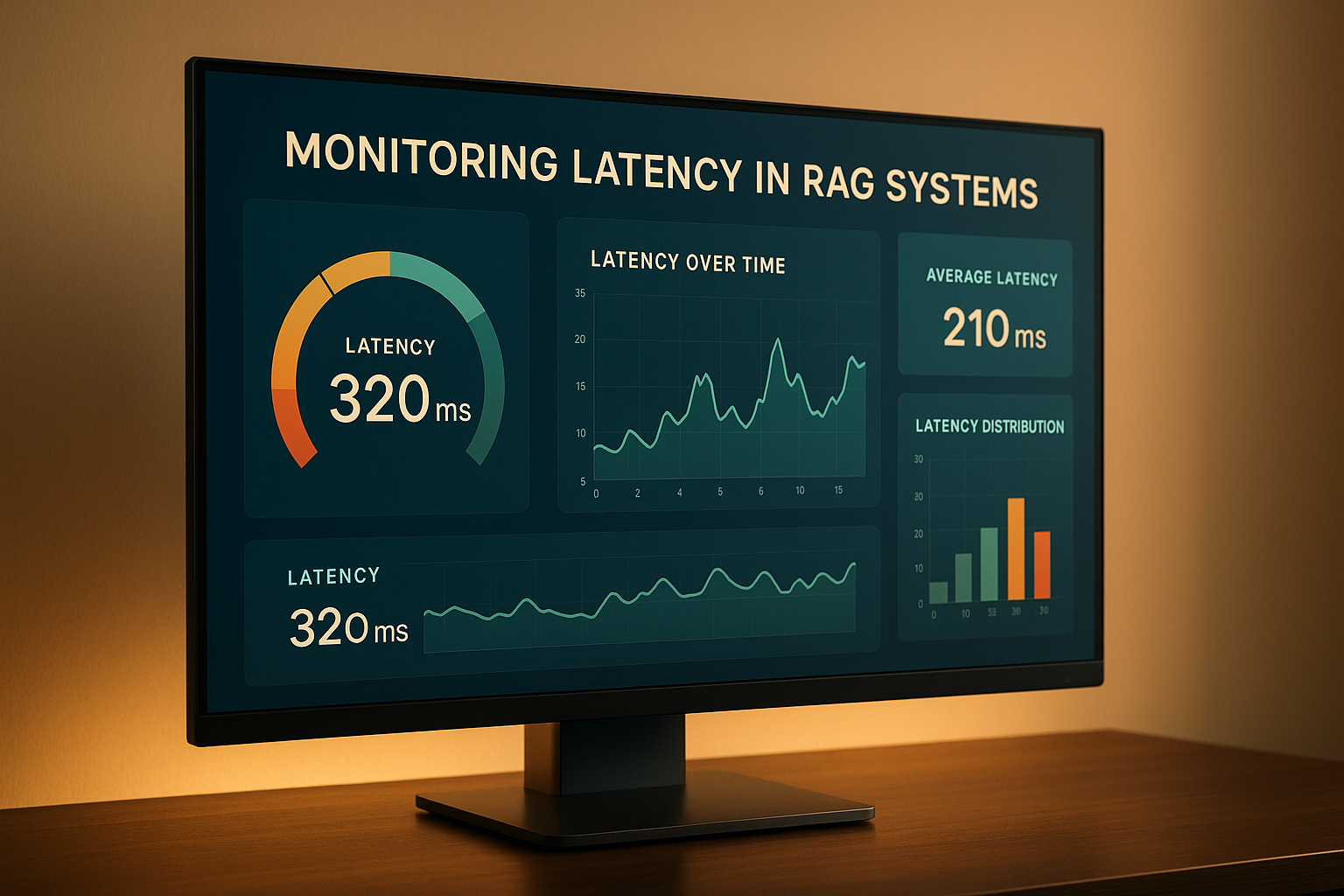

Slow RAG systems frustrate users and waste resources. Monitoring latency is your first step to solving this. Latency in Retrieval-Augmented Generation (RAG) systems comes from three key areas: retrieval, generation, and network overhead. Each adds delays that can ruin user experiences and increase costs.

Start with the core latency metrics.

End-to-end response time is the go-to metric for assessing overall system health. It reflects the complete user experience, measuring the time from when a query is submitted to when the final response is delivered. Because it includes retrieval, generation, and network time, it is the number users actually feel.

Retrieval latency tracks the time it takes for your system to fetch relevant documents from the knowledge base. Monitoring this separately is critical since delays here often point to issues with your search infrastructure or index setup.

Generation latency measures how long the language model takes to produce a response once it has the retrieved context. Generation is typically a major contributor to total latency, and larger models generally respond more slowly than smaller ones. Keeping a close eye on this metric helps you spot inference bottlenecks.

Component-specific latencies break down the entire process into smaller, measurable steps. By tracking individual parts like context preparation or preprocessing, you can pinpoint exactly where slowdowns occur, making troubleshooting more efficient.
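As a minimal sketch of collecting component-specific latencies, the helper below times each named stage of a single request with `time.perf_counter` and reports each stage's share of the total. The `StageTimer` class, the stage names, and the `time.sleep` placeholders are illustrative assumptions rather than part of any framework, and the share calculation assumes the stages run one after another.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


class StageTimer:
    """Collects wall-clock durations (ms) for the named pipeline stages of one request."""

    def __init__(self) -> None:
        self.stages: Dict[str, float] = {}

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            # Accumulate, so a stage entered twice (e.g. two retrieval calls) is summed.
            elapsed_ms = (time.perf_counter() - start) * 1000
            self.stages[name] = self.stages.get(name, 0.0) + elapsed_ms

    def breakdown(self) -> Dict[str, float]:
        """Each stage's share of the summed stage time (assumes sequential stages)."""
        total = sum(self.stages.values())
        return {name: ms / total for name, ms in self.stages.items()} if total else {}

    def slowest(self) -> str:
        return max(self.stages, key=self.stages.get)


# Usage with placeholder work standing in for real pipeline steps.
timer = StageTimer()
with timer.stage("retrieval"):
    time.sleep(0.01)   # stand-in for a vector search
with timer.stage("context_prep"):
    time.sleep(0.002)  # stand-in for chunk selection and prompt assembly
with timer.stage("generation"):
    time.sleep(0.06)   # stand-in for the LLM call
print(timer.slowest(), {k: round(v, 2) for k, v in timer.breakdown().items()})
```

If stages overlap (for example, parallel retrieval calls), the summed stage time will exceed the end-to-end time, so record end-to-end latency separately as well.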

Percentile measurements (such as p50, p95, and p99) show how latency is distributed across requests rather than just its average, and how that distribution shifts under different loads. They expose the occasional slow outliers that averages hide but users still notice. When combined with throughput and error rates, they offer a well-rounded view of system performance.
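To make the difference between averages and percentiles concrete, here is a small sketch using the nearest-rank percentile definition on a hypothetical set of 100 request latencies. The sample values are invented for illustration.

```python
import math
from statistics import mean
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample that is >= p% of all samples."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# 100 hypothetical request latencies (ms): most are fast, a few are very slow.
latencies = [200.0] * 94 + [900.0] * 5 + [4000.0]
print(f"mean={mean(latencies):.0f} p50={percentile(latencies, 50):.0f} "
      f"p95={percentile(latencies, 95):.0f} p99={percentile(latencies, 99):.0f}")
```

The mean (273 ms) looks healthy, while p95 reveals that one request in twenty takes 900 ms or more. Monitoring tools use several percentile definitions (interpolated, histogram-estimated), so small differences between tools are expected.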

To fully understand system performance, you'll need to go beyond core latency metrics.

Throughput measures how many requests your system can handle in a given time frame. This becomes especially important as your RAG system scales and faces varying workloads.

Error rates directly affect perceived latency, as failed requests often need to be retried. Tracking issues like timeouts, retrieval errors, and generation failures can help you isolate and address problems that might otherwise distort latency metrics.

Resource utilization - including CPU, memory, and GPU usage - can reveal hardware limitations that might lead to latency spikes. This is especially crucial when dealing with large document collections or resource-intensive tasks.

Queue depth and concurrent request handling shed light on how your system performs under simultaneous queries. Systems that process requests sequentially may experience growing latency as queues build up, while parallelized systems can maintain steadier performance.

Regularly monitor hardware metrics like CPU, memory, GPU usage, and cache hit rates to quickly identify and address resource bottlenecks.

Your monitoring strategy should align with the specific needs of your RAG deployment.

For customer-facing applications, speed and consistency are non-negotiable. These systems should focus on minimizing response times and monitoring higher percentile metrics to spot occasional delays. Setting alerts for performance thresholds ensures quick action when issues arise.

In real-time scenarios like live customer support, aggressive latency targets are crucial. Here, percentile measurements provide a clearer view of performance under load compared to simple averages.

For batch processing systems, throughput often matters more than individual response times. Monitoring total processing time for document batches and keeping an eye on resource usage can help optimize efficiency.

High-volume systems should prioritize scalability. Keep track of how latency shifts as user numbers grow, monitor queue depths during peak times, and evaluate how well the system recovers after traffic surges.

If cost is a concern, balancing latency with resource use becomes critical. Sometimes, slightly higher latency is acceptable if it results in better resource efficiency and lower operational costs.

Ultimately, tailor your monitoring to match user expectations and the demands of your use case. It’s all about finding the right balance between performance and resource management.

When it comes to keeping a close eye on latency in Retrieval-Augmented Generation (RAG) systems, Application Performance Monitoring (APM) platforms are a must-have. Tools like New Relic, Datadog, and Dynatrace offer visibility across your entire application stack. They can track distributed traces throughout the RAG pipeline, from the moment a query is initiated to when the final result is generated.

For teams looking for more customization, Prometheus with Grafana is an excellent open-source option. Prometheus gathers time-series metrics from various RAG components, while Grafana transforms that data into easy-to-read dashboards. This setup is ideal for those who want granular control over their monitoring.
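Prometheus typically records stage latency as a histogram: cumulative `_bucket` counters labeled with an upper bound `le`, plus `_sum` and `_count` series. In Python you would normally use the official `prometheus_client` library's `Histogram` for this; the stdlib-only sketch below hand-rolls a single series purely to show what gets exposed. The metric name, the `stage` label, and the bucket boundaries are assumptions to adapt.

```python
import bisect
from typing import List, Sequence


class LatencyHistogram:
    """Minimal cumulative histogram for one label set, rendered in Prometheus text format."""

    def __init__(self, name: str, stage: str, buckets: Sequence[float]) -> None:
        self.name = name
        self.stage = stage
        self.bounds: List[float] = sorted(buckets)
        self.counts = [0] * len(self.bounds)  # non-cumulative counts per finite bucket
        self.total_count = 0
        self.total_sum = 0.0

    def observe(self, seconds: float) -> None:
        # First upper bound >= the observation ("le" means less than or equal).
        i = bisect.bisect_left(self.bounds, seconds)
        if i < len(self.bounds):
            self.counts[i] += 1
        self.total_count += 1  # the +Inf bucket always counts every observation
        self.total_sum += seconds

    def render(self) -> str:
        label = f'stage="{self.stage}"'
        lines = [f"# TYPE {self.name} histogram"]
        cumulative = 0
        for bound, count in zip(self.bounds, self.counts):
            cumulative += count
            lines.append(f'{self.name}_bucket{{{label},le="{bound}"}} {cumulative}')
        lines.append(f'{self.name}_bucket{{{label},le="+Inf"}} {self.total_count}')
        lines.append(f"{self.name}_sum{{{label}}} {self.total_sum}")
        lines.append(f"{self.name}_count{{{label}}} {self.total_count}")
        return "\n".join(lines)


h = LatencyHistogram("rag_stage_latency_seconds", "retrieval", [0.05, 0.1, 0.25, 0.5, 1.0])
for seconds in [0.03, 0.08, 0.08, 0.3, 2.0]:
    h.observe(seconds)
print(h.render())
```

In Grafana, a p95 panel over such a metric could use the PromQL query `histogram_quantile(0.95, sum by (le) (rate(rag_stage_latency_seconds_bucket[5m])))`. Bucket boundaries matter: the estimate can only be as precise as the buckets around your latency targets.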

If log aggregation is your focus, the Elastic Stack (ELK: Elasticsearch, Logstash, and Kibana) is a powerful choice. If you emit a log entry at each stage of the RAG pipeline, Elasticsearch can index those entries and Kibana can build real-time dashboards that highlight latency trends and pinpoint bottlenecks.
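Logs are easiest to chart when each stage writes one structured JSON line. The sketch below uses Python's standard `logging` and `json` modules; the field names (`request_id`, `stage`, `duration_ms`) are hypothetical choices, apart from `@timestamp`, the conventional Elastic timestamp field. A shipper such as Filebeat or Logstash would forward these lines to Elasticsearch.

```python
import json
import logging
import sys
import time

logger = logging.getLogger("rag.latency")
handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(logging.Formatter("%(message)s"))  # the message itself is the JSON document
logger.addHandler(handler)
logger.setLevel(logging.INFO)


def log_stage(request_id: str, stage: str, duration_ms: float, **fields) -> str:
    """Emit one JSON line per pipeline stage; extra keyword fields are added as-is."""
    record = {
        "@timestamp": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime()),
        "request_id": request_id,
        "stage": stage,
        "duration_ms": round(duration_ms, 2),
        **fields,
    }
    line = json.dumps(record)
    logger.info(line)
    return line


log_stage("req-42", "retrieval", 118.4, top_k=5, index="docs-v3")
log_stage("req-42", "generation", 842.9, model="example-model", output_tokens=212)
```

Sharing a `request_id` across stages lets Kibana group one request's entries, so you can see which stage dominated a slow response.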

For precise tracking, custom instrumentation libraries like OpenTelemetry allow you to monitor specific RAG operations. You can measure timing for tasks like retrieval queries, context preparation, and model inference, sending this data to a backend of your choice, whether it’s a commercial APM or a custom-built system.
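OpenTelemetry models each timed operation as a span nested inside a trace; in its Python API that looks like `with tracer.start_as_current_span("retrieval"):`. To show the idea without requiring the SDK, here is a hypothetical, stdlib-only miniature that is not the OpenTelemetry API: a `span` context manager that records name, duration, trace ID, and parent ID, and "exports" finished spans to an in-memory list standing in for a real backend.

```python
import contextvars
import time
import uuid
from contextlib import contextmanager
from typing import Dict, Iterator, List

# Hypothetical in-memory "exporter": a real setup would send spans to an APM or collector.
FINISHED_SPANS: List[Dict] = []
# Holds the currently open span (or None) so nested spans can find their parent.
_current_span = contextvars.ContextVar("current_span", default=None)


@contextmanager
def span(name: str, **attributes) -> Iterator[Dict]:
    parent = _current_span.get()
    record = {
        "name": name,
        "span_id": uuid.uuid4().hex[:16],
        "trace_id": parent["trace_id"] if parent else uuid.uuid4().hex,
        "parent_id": parent["span_id"] if parent else None,
        "attributes": dict(attributes),
    }
    token = _current_span.set(record)
    start = time.perf_counter()
    try:
        yield record
    finally:
        record["duration_ms"] = (time.perf_counter() - start) * 1000
        _current_span.reset(token)
        FINISHED_SPANS.append(record)


def answer(query: str) -> str:
    with span("rag.request", query_length=len(query)):
        with span("rag.retrieval", top_k=5):
            time.sleep(0.01)   # stand-in for the vector search
        with span("rag.context_prep"):
            time.sleep(0.002)  # stand-in for prompt assembly
        with span("rag.generation", model="example-model"):
            time.sleep(0.03)   # stand-in for the LLM call
        return "answer"


answer("What is our refund policy?")
for s in FINISHED_SPANS:
    print(f'{s["name"]:<18} {s["duration_ms"]:7.1f} ms parent={s["parent_id"]}')
```

Because child spans share the root's `trace_id` and point to it via `parent_id`, a backend can draw the familiar waterfall view of one request.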

Finally, cloud-native monitoring services such as AWS CloudWatch, Google Cloud Monitoring, and Azure Monitor integrate seamlessly with your infrastructure. These services automatically collect basic metrics and can be extended with custom measurements tailored to your RAG workflow.

Once you've chosen your tools, the next step is configuring them for effective latency tracking. A few common practices show how these pieces come together in production.

Many production environments use a hybrid approach, combining APM platforms for general monitoring with custom metrics that target RAG-specific operations. This setup offers a mix of ready-to-use features and tailored insights.

Teams often track custom business metrics, such as the time it takes to retrieve the first relevant document or the overhead of context preparation. These metrics provide a direct view of RAG performance.

Automated alerting workflows can trigger actions when latency exceeds acceptable thresholds. For instance, alerts might automatically scale up compute resources or switch to faster retrieval methods to maintain performance.

Establishing a performance baseline is another critical step. By collecting data under various load conditions, you can document your system’s normal performance range and identify deviations quickly. This baseline also helps with capacity planning and optimization.

Lastly, integrating monitoring tools into existing workflows ensures that performance data reaches the right people. Connecting these tools to incident management systems, chat platforms, or ticketing systems ensures that any issues are promptly addressed.

Choosing the right tools and setting them up thoughtfully can make all the difference in managing latency in RAG systems. Start with basic monitoring, and as your system evolves, expand your setup to capture more detailed insights and optimize performance over time.

Building on earlier latency diagnostics, these strategies aim to address bottlenecks in both retrieval and generation. Use monitoring data to focus improvements where they’ll have the most impact.

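Speeding up the retrieval phase starts with optimizing vector database operations. Approximate nearest neighbor (ANN) indexes such as HNSW (Hierarchical Navigable Small World) and IVF (Inverted File) avoid comparing the query against every stored vector, trading a small amount of recall for much faster queries. Their search-time parameters let you tune the balance between speed and precision.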
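To illustrate the IVF idea, here is a toy, pure-Python sketch: vectors are grouped by their nearest centroid, and a query scans only the `nprobe` closest groups. It is illustrative only; real IVF indexes learn centroids with k-means (here they are just sampled vectors), and in practice you would use a library such as FAISS, whose IVF indexes expose a similar `nprobe` setting. HNSW works differently (a layered proximity graph) and is not shown.

```python
import random
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


class ToyIVFIndex:
    """Vectors are bucketed by nearest centroid; a search scans only `nprobe` buckets."""

    def __init__(self, centroids: List[Vector]) -> None:
        self.centroids = centroids
        self.lists: Dict[int, List[Tuple[int, Vector]]] = {i: [] for i in range(len(centroids))}

    def _nearest_centroids(self, v: Vector, n: int) -> List[int]:
        order = sorted(range(len(self.centroids)), key=lambda i: sq_dist(v, self.centroids[i]))
        return order[:n]

    def add(self, doc_id: int, v: Vector) -> None:
        self.lists[self._nearest_centroids(v, 1)[0]].append((doc_id, v))

    def search(self, q: Vector, k: int = 3, nprobe: int = 1) -> List[int]:
        # Only vectors in the nprobe closest lists are compared against the query.
        candidates = [item for c in self._nearest_centroids(q, nprobe) for item in self.lists[c]]
        candidates.sort(key=lambda item: sq_dist(q, item[1]))
        return [doc_id for doc_id, _ in candidates[:k]]


random.seed(0)
vectors = [[random.random() for _ in range(8)] for _ in range(1000)]
index = ToyIVFIndex(centroids=random.sample(vectors, 16))
for i, v in enumerate(vectors):
    index.add(i, v)
print(index.search(vectors[7], k=3, nprobe=2))  # scans roughly 2/16 of the data
```

Raising `nprobe` improves recall at the cost of scanning more vectors; with `nprobe` equal to the number of lists, the search becomes exhaustive.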

Another effective approach is reducing embedding dimensions with techniques like PCA (Principal Component Analysis) or learned methods. Smaller vectors make similarity searches faster and use less memory, but they can lower retrieval quality, so measure recall before and after the change.

To further enhance retrieval, use a multi-stage (coarse-to-fine) strategy. For instance, begin with a quick, broad search to identify a smaller pool of candidate documents, then apply a more expensive ranking step only to that subset, saving time and resources.
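A minimal sketch of the coarse-to-fine pattern follows. The scoring functions are hypothetical stand-ins: word overlap plays the cheap first-stage scorer, and `fake_reranker` plays an expensive model such as a cross-encoder. The point is that the expensive scorer runs only on the shortlist.

```python
from typing import Callable, List, Sequence


def two_stage_retrieve(
    query: str,
    docs: Sequence[str],
    cheap_score: Callable[[str, str], float],
    expensive_score: Callable[[str, str], float],
    candidates: int = 50,
    k: int = 5,
) -> List[str]:
    """A cheap scorer shortlists `candidates` docs; an expensive scorer re-ranks only those."""
    shortlist = sorted(docs, key=lambda d: cheap_score(query, d), reverse=True)[:candidates]
    return sorted(shortlist, key=lambda d: expensive_score(query, d), reverse=True)[:k]


def word_overlap(q: str, d: str) -> float:
    return float(len(set(q.lower().split()) & set(d.lower().split())))


expensive_calls = 0


def fake_reranker(q: str, d: str) -> float:
    """Hypothetical stand-in for a slow re-ranking model; counts how often it runs."""
    global expensive_calls
    expensive_calls += 1
    return word_overlap(q, d) / (1 + len(d.split()))


docs = [f"document {i} about topic {i % 10}" for i in range(1000)]
docs.append("refund policy for annual plans")
top = two_stage_retrieve("refund policy", docs, word_overlap, fake_reranker, candidates=20, k=3)
print(top[0], expensive_calls)  # the expensive scorer ran 20 times, not 1,001
```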

Caching frequently used embeddings in an LRU (Least Recently Used) cache also cuts down on redundant computation, which is especially useful when the same queries repeat.
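In a single Python process, `functools.lru_cache` provides an LRU cache directly. In this sketch, `expensive_embed` is a hypothetical stand-in for an embedding model call, and the whitespace and case normalization is an assumption that raises the hit rate for trivially different queries.

```python
from functools import lru_cache
from typing import Tuple

EMBED_CALLS = 0


def expensive_embed(text: str) -> Tuple[float, ...]:
    """Hypothetical stand-in for an embedding model or API call."""
    global EMBED_CALLS
    EMBED_CALLS += 1
    return tuple(float(ord(c) % 7) for c in text[:8])


@lru_cache(maxsize=10_000)
def cached_embed(normalized_query: str) -> Tuple[float, ...]:
    # Tuples are returned so callers cannot mutate a cached value in place.
    return expensive_embed(normalized_query)


def embed_query(query: str) -> Tuple[float, ...]:
    # Lowercase and collapse whitespace before the cache lookup.
    return cached_embed(" ".join(query.lower().split()))


for q in ["Reset password", "reset  password", "RESET PASSWORD", "billing address"]:
    embed_query(q)
print(EMBED_CALLS, cached_embed.cache_info())
```

An in-process cache is not shared between replicas; for a horizontally scaled service, a shared cache such as Redis is the usual next step.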

Finally, keep your vector indexes optimized. Regularly rebuilding indexes and partitioning large datasets into smaller, more relevant subsets ensures faster and more precise searches.

These adjustments in retrieval pave the way for smoother and quicker response generation.

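Reducing generation latency starts with streaming responses. When tokens are sent to the user as soon as the language model produces them, users see output almost immediately. Total generation time stays the same, but perceived latency, measured as time to first token, drops substantially.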
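The sketch below forwards tokens as they arrive and measures both time to first token (TTFT) and total time. `fake_llm_stream` is a hypothetical stand-in for a streaming model client; in a web service, the `print` call would be replaced by writing to a chunked or server-sent-events response.

```python
import time
from typing import Iterator, Optional, Tuple


def fake_llm_stream(tokens: int = 20, delay_s: float = 0.01) -> Iterator[str]:
    """Hypothetical stand-in for a streaming model client that yields tokens as produced."""
    for i in range(tokens):
        time.sleep(delay_s)
        yield f"tok{i} "


def stream_to_user(stream: Iterator[str]) -> Tuple[float, float, str]:
    """Forward tokens as they arrive; return (time to first token ms, total ms, full text)."""
    start = time.perf_counter()
    first_token_ms: Optional[float] = None
    parts = []
    for token in stream:
        if first_token_ms is None:
            first_token_ms = (time.perf_counter() - start) * 1000
        parts.append(token)
        print(token, end="", flush=True)  # in a web app: write the chunk to the response
    total_ms = (time.perf_counter() - start) * 1000
    print()
    return (first_token_ms if first_token_ms is not None else total_ms), total_ms, "".join(parts)


ttft, total, text = stream_to_user(fake_llm_stream())
print(f"time to first token: {ttft:.0f} ms, full response: {total:.0f} ms")
```

Tracking TTFT alongside total generation time shows whether streaming is actually reaching users, for example when a proxy buffers the response.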

Caching is another powerful tool. For example, store responses to common questions and, for each new query, use a semantic similarity check to decide whether a cached answer already fits. This works particularly well for FAQ-like applications.
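A minimal semantic cache compares the new query's embedding with cached query embeddings and reuses an answer when cosine similarity clears a threshold. This sketch assumes you already have embeddings (the 3-dimensional vectors are toy values) and scans entries linearly; the 0.95 threshold is an assumption to tune, because setting it too low returns answers to the wrong question.

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Return a cached answer when a new query embedding is close enough to a cached one."""

    def __init__(self, threshold: float = 0.95) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[Sequence[float], str]] = []

    def get(self, query_vec: Sequence[float]) -> Optional[str]:
        best = max(self.entries, key=lambda e: cosine(query_vec, e[0]), default=None)
        if best is not None and cosine(query_vec, best[0]) >= self.threshold:
            return best[1]
        return None  # cache miss: run the full RAG pipeline, then put() the result

    def put(self, query_vec: Sequence[float], answer: str) -> None:
        self.entries.append((query_vec, answer))


cache = SemanticCache(threshold=0.95)
cache.put([0.9, 0.1, 0.0], "You can reset your password from the Security settings page.")
print(cache.get([0.88, 0.12, 0.01]))  # near-duplicate question: cache hit
print(cache.get([0.0, 0.2, 0.9]))     # unrelated question: None
```

At scale, the linear scan would be replaced by a lookup in a vector index, which is the same machinery used for retrieval.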

You can also speed up inference times with model optimization techniques like quantization (reducing model precision) and model distillation (creating smaller, faster models).

Parallel processing during context preparation pays off when handling many documents. Instead of analyzing and ranking documents one after another, process them concurrently to save time.
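For I/O-bound per-document steps (for example, calls to a remote re-ranking or summarization service), a thread pool from `concurrent.futures` is a simple way to run them concurrently. `prepare_chunk` and its 50 ms sleep are hypothetical placeholders for that work.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def prepare_chunk(doc: str) -> str:
    """Hypothetical per-document step, e.g. cleaning or a remote scoring call."""
    time.sleep(0.05)  # stand-in for I/O-bound work such as an API request
    return doc.strip().lower()


def prepare_context(docs: List[str], max_workers: int = 8) -> List[str]:
    # pool.map runs the calls concurrently but yields results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(prepare_chunk, docs))


docs = [f"  Doc {i} text  " for i in range(8)]
start = time.perf_counter()
prepared = prepare_context(docs)
elapsed = time.perf_counter() - start
print(prepared[:2], f"{elapsed:.2f}s")  # close to one 0.05 s step instead of eight
```

Threads help when the work waits on I/O. CPU-heavy Python work is limited by the global interpreter lock, so a `ProcessPoolExecutor` or batched model inference is usually the better fit there.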

Lastly, use smart context truncation to keep only the most relevant parts of the retrieved documents. This reduces the amount of text sent to the language model while preserving the information it needs to answer well.
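One simple form of smart truncation is a greedy token budget: sort chunks by relevance score and keep each one that still fits. The word-count token estimate is an assumption for illustration; a real system should count tokens with the model's own tokenizer.

```python
from typing import List, Tuple


def approx_tokens(text: str) -> int:
    # Assumption: whitespace word count as a rough token proxy.
    return len(text.split())


def fit_to_budget(scored_chunks: List[Tuple[float, str]], budget_tokens: int) -> List[str]:
    """Greedily keep the highest-scoring chunks that fit within the token budget."""
    selected, used = [], 0
    for _score, chunk in sorted(scored_chunks, key=lambda sc: sc[0], reverse=True):
        cost = approx_tokens(chunk)
        if used + cost <= budget_tokens:
            selected.append(chunk)
            used += cost
    return selected


chunks = [
    (0.91, "Refunds are available within 30 days of purchase."),
    (0.45, "Our company was founded in a small garage."),
    (0.83, "Annual plans are refunded pro rata after 30 days."),
    (0.12, "The office is closed on public holidays."),
]
print(fit_to_budget(chunks, budget_tokens=20))
```

Here the two relevant chunks (8 and 9 words) fit in the 20-token budget and the off-topic ones are dropped. Adding a minimum relevance score keeps low-value chunks out even when there is room left.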

These generation-focused strategies can significantly improve response times while maintaining accuracy.

A well-designed system can prevent bottlenecks and improve overall efficiency. Start by implementing asynchronous processing so that different operations run independently. For example, placing message queues between the retrieval and generation components decouples the two stages, so a slow step in one does not block the other.
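Within one process, `asyncio.Queue` gives a compact picture of queue-decoupled stages: a retrieval worker feeds a queue consumed by several generation workers, so retrieval for the next query overlaps with generation for the previous one. The sleeps are hypothetical stand-ins for async I/O calls; across services, the same shape would use a message broker such as RabbitMQ, Kafka, or SQS.

```python
import asyncio
from typing import List, Tuple


async def retrieval_worker(inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    while True:
        query = await inbox.get()
        await asyncio.sleep(0.01)  # stand-in for an async vector search
        await outbox.put((query, [f"doc for {query}"]))
        inbox.task_done()


async def generation_worker(inbox: asyncio.Queue, results: List[Tuple[str, str]]) -> None:
    while True:
        query, docs = await inbox.get()
        await asyncio.sleep(0.03)  # stand-in for an async LLM call
        results.append((query, f"answer to {query} using {len(docs)} doc(s)"))
        inbox.task_done()


async def run_pipeline(queries: List[str], generators: int = 3) -> List[Tuple[str, str]]:
    to_retrieve: asyncio.Queue = asyncio.Queue()
    to_generate: asyncio.Queue = asyncio.Queue(maxsize=100)  # bounded, so it applies back-pressure
    results: List[Tuple[str, str]] = []
    workers = [asyncio.create_task(retrieval_worker(to_retrieve, to_generate))]
    workers += [asyncio.create_task(generation_worker(to_generate, results)) for _ in range(generators)]
    for q in queries:
        to_retrieve.put_nowait(q)
    await to_retrieve.join()  # every query has been retrieved and handed off...
    await to_generate.join()  # ...and every handed-off item has been answered
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results


results = asyncio.run(run_pipeline([f"q{i}" for i in range(6)]))
print(len(results), results[0])
```

Queue sizes are also useful metrics: a growing `to_generate` backlog points at generation as the bottleneck.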

Connection pooling is another key optimization. By reusing database and API connections instead of creating new ones for every request, you can cut down on overhead and improve response times.
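Most database drivers and HTTP clients already pool connections (for example, `requests.Session` or SQLAlchemy engines), so use those first. To show the mechanism, here is a minimal fixed-size pool built on the thread-safe `queue.Queue`; `open_connection` is a hypothetical factory standing in for the slow connection setup.

```python
import queue
from contextlib import contextmanager
from typing import Callable, Generic, Iterator, TypeVar

T = TypeVar("T")


class ConnectionPool(Generic[T]):
    """Fixed-size pool: connections are created once, then borrowed and returned."""

    def __init__(self, factory: Callable[[], T], size: int = 4) -> None:
        self._idle: "queue.Queue[T]" = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self, timeout: float = 5.0) -> Iterator[T]:
        conn = self._idle.get(timeout=timeout)  # raises queue.Empty if the pool stays exhausted
        try:
            yield conn
        finally:
            self._idle.put(conn)  # return the connection for reuse instead of closing it


created = 0


def open_connection() -> dict:
    """Hypothetical factory; a real one would open a database or HTTP connection."""
    global created
    created += 1
    return {"id": created}


pool = ConnectionPool(open_connection, size=2)
for _ in range(100):
    with pool.connection() as conn:
        pass  # run the query with conn here
print(created)  # 2 connections opened for 100 requests
```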

Deploying components closer to users is essential for reducing network delays. For global applications, content delivery networks (CDNs) are invaluable for serving static resources quickly.

To handle varying workloads, use auto-scaling to adjust compute resources based on real-time demand indicators like response times or queue depth.
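A common scaling rule is proportional: desired replicas = ceil(current replicas × current metric ÷ target metric), which is the shape of the Kubernetes Horizontal Pod Autoscaler formula. This sketch adds a tolerance band and min/max bounds; the queue-depth target in the example is a hypothetical value.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule with a tolerance band to avoid flapping."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:  # close enough to target: leave the replica count alone
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Target: 10 queued requests per replica; 4 replicas currently average 25.
print(desired_replicas(4, current_metric=25, target_metric=10))  # scale out to 10
```

Queue depth per replica tends to scale more linearly with replica count than latency does, which makes it a steadier input for this kind of rule; latency still works as a trigger for alerts.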

Efficient memory management is also critical. Reduce garbage collection pauses by using memory pools for frequent allocations, and release large data structures promptly to prevent latency spikes.

Finally, batch processing of similar queries can save resources by spreading the cost of retrieval and generation across multiple requests. Regular performance profiling - analyzing CPU usage, memory allocation, and I/O operations - helps identify and resolve new bottlenecks as your system evolves.

Once you've implemented measures to reduce latency, the work doesn’t stop there. Keeping your RAG system running smoothly requires constant monitoring and fine-tuning. Over time, factors like data growth, shifting user behavior, and system updates can chip away at performance. Regular adjustments ensure your system remains responsive and efficient, adapting to these changes without losing the gains you've achieved.

Set up weekly automated tests to simulate real-world traffic, including peak loads - up to three times your usual activity. These tests establish a performance baseline, helping you quickly spot any signs of degradation.

Synthetic monitoring is a valuable tool for this. It runs predefined queries at regular intervals, offering continuous insights into system performance. Design these tests to include a variety of query types, such as straightforward factual questions, intricate multi-part queries, and edge cases with unusual formatting. By monitoring response times for each category separately, you can pinpoint which operations are most impacted by changes.

Track key metrics like average response times, 95th percentile latency, and error rates using a performance dashboard. The monitoring tools mentioned earlier will help you capture and analyze these metrics over time. This historical data is essential for diagnosing issues and planning system upgrades.

Regression testing is another must. Before rolling out any updates, run a full test suite to ensure new features or optimizations don’t unintentionally slow things down. Always have a rollback plan in place in case performance takes a hit.
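A latency regression check can run as a release gate in CI: compare the candidate build's p95 against the baseline measured under the same load and fail if it grew beyond a tolerance. The 10% tolerance and the sample data are assumptions for illustration; noisy environments need more samples or a wider band.

```python
import math
from typing import Dict, Sequence


class LatencyRegression(Exception):
    """Raised when the candidate build is measurably slower than the baseline."""


def p95(samples: Sequence[float]) -> float:
    ordered = sorted(samples)
    return ordered[math.ceil(95 * len(ordered) / 100) - 1]  # nearest-rank percentile


def latency_regression_gate(baseline_ms: Sequence[float], candidate_ms: Sequence[float],
                            max_regression: float = 0.10) -> Dict[str, float]:
    """Raise if the candidate's p95 exceeds the baseline's p95 by more than max_regression."""
    base, cand = p95(baseline_ms), p95(candidate_ms)
    change = (cand - base) / base
    if change > max_regression:
        raise LatencyRegression(f"p95 regressed {change:.0%}: {base:.0f} ms -> {cand:.0f} ms")
    return {"baseline_p95_ms": base, "candidate_p95_ms": cand, "change": change}


baseline = [400.0] * 95 + [900.0] * 5
ok_candidate = [410.0] * 95 + [950.0] * 5
print(latency_regression_gate(baseline, ok_candidate))  # +2.5%: passes
```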

Once you’ve established a performance baseline, keeping your models and algorithms up to date is crucial to avoiding future latency problems.

Model updates can have a major impact - sometimes for better, sometimes for worse. Always test new language models in a staging environment before deploying them to production. While newer models often improve accuracy, they may require new optimization techniques to maintain speed.

Embedding model upgrades should be approached with care, as they affect your entire vector database. When introducing a new embedding model, consider running it alongside the old one during a transition period. This allows you to compare results and ensure the new model meets or exceeds the previous performance standards.

For algorithm optimization, focus on areas flagged by your monitoring data. If retrieval operations show slower response times, experiment with different indexing algorithms or similarity search methods. Document the effects of each change to build a knowledge base for future reference.

When rolling out significant updates, use gradual rollouts to minimize risks. Start by applying changes to a small percentage of traffic, monitoring the results closely. If everything looks good, gradually expand the rollout. This approach helps catch issues early and makes it easier to revert changes if needed.
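A common way to send a small, stable percentage of traffic to a change is to hash a user ID into buckets. This sketch uses `hashlib.sha256` over 10,000 buckets; the feature name and percentages are hypothetical. Because the hash is deterministic, a user keeps the same assignment across requests, and users included at 5% stay included when the rollout grows to 20%.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: float) -> bool:
    """Deterministically include a user if their hash bucket falls below percent% of 10,000."""
    # Hashing the feature name with the user ID gives each rollout an independent split.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < percent * 100


users = [f"user-{i}" for i in range(10_000)]
share = sum(in_rollout(u, "new-embedding-model", 5) for u in users) / len(users)
print(f"{share:.1%} of users routed to the new model")  # close to 5%
```

Tagging each request's latency metrics with the rollout arm lets you compare p95 between old and new paths before widening the rollout.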

Maintain detailed change logs to track system modifications alongside performance data. When latency spikes occur, these logs can help you quickly identify the cause and guide decisions for future updates.

Set up automated alerts to flag when response times double your baseline or when error rates exceed 1%. Combine this with direct user feedback to catch problems that might not show up in your metrics. Adjust alert thresholds for different traffic periods to ensure you catch both sudden spikes and slow performance declines.
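The two alert rules in this paragraph (response time at double the baseline, error rate above 1%) can be expressed as a small evaluation function that runs over each monitoring window. `WindowStats` and the example numbers are hypothetical; in practice the same rules would usually live in your monitoring tool's alerting configuration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WindowStats:
    p95_ms: float
    requests: int
    errors: int


def evaluate_alerts(window: WindowStats, baseline_p95_ms: float,
                    latency_factor: float = 2.0, max_error_rate: float = 0.01) -> List[str]:
    """Return alert messages for one window; defaults mirror the 2x-baseline and 1% rules."""
    alerts = []
    if window.p95_ms >= latency_factor * baseline_p95_ms:
        alerts.append(f"p95 {window.p95_ms:.0f} ms is >= {latency_factor:g}x baseline "
                      f"({baseline_p95_ms:.0f} ms)")
    error_rate = window.errors / window.requests if window.requests else 0.0
    if error_rate > max_error_rate:
        alerts.append(f"error rate {error_rate:.2%} exceeds {max_error_rate:.0%}")
    return alerts


print(evaluate_alerts(WindowStats(p95_ms=1900, requests=5000, errors=20), baseline_p95_ms=900))
print(evaluate_alerts(WindowStats(p95_ms=950, requests=5000, errors=80), baseline_p95_ms=900))
```

To adjust thresholds for different traffic periods, pass the baseline measured for that period (for example, a separate peak-hour baseline) instead of a single global value.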

Establish clear escalation procedures to address critical issues promptly. Assign ownership for specific types of alerts and set response time expectations. For example, a system-wide slowdown might require immediate attention, while a gradual decline can be addressed during regular business hours.

After any significant performance issue, conduct a root cause analysis. Document what went wrong, how it was fixed, and what steps can prevent a recurrence. This process builds institutional knowledge and improves your team’s ability to handle future incidents.

Analyze user feedback alongside system metrics to identify patterns. For example, if users report slow responses during certain times, dig into your monitoring data for those intervals to uncover the underlying causes.

Finally, conduct regular performance reviews - monthly is a good cadence. Use these reviews to evaluate your monitoring and alerting strategies, close any gaps in coverage, and adjust thresholds as your system grows and user behavior evolves. This ensures your monitoring evolves alongside your system, keeping it ready for whatever comes next.

Keeping latency in check is key to building AI applications that users trust and enjoy. In this guide, we’ve broken down the main sources of latency - from retrieval operations to generation processes - and shown how understanding these areas sets the stage for smarter optimization.

Tracking metrics is only the beginning. By putting proper monitoring tools and processes in place, you can catch and address potential issues before they impact users.

Cutting down latency involves fine-tuning vector databases, improving retrieval algorithms, and efficiently managing system resources. As your RAG system grows and user needs shift, new challenges will undoubtedly arise. But the strategies we’ve outlined - like automated testing and gradual rollouts - will help your system adapt without losing its edge. Regular performance reviews, proactive alerts, and user input create a continuous improvement loop that keeps your system fast and reliable.

Every millisecond you save directly enhances the experience for your users. They expect quick, precise answers from AI systems, and meeting those expectations takes consistent effort and the disciplined approach to monitoring and optimization we’ve explored here.

Reducing latency in retrieval-augmented generation (RAG) systems involves fine-tuning both the retrieval and generation processes for speed and efficiency.

On the retrieval side, one effective approach is caching frequently used embeddings or results to eliminate redundant computations. Beyond that, refining indexing techniques, optimizing how queries match data, and employing smart chunking strategies can make the retrieval process smoother and faster.

For the generation phase, cutting down the number of tokens processed - whether in the input or output - can significantly reduce response times. Leveraging hardware acceleration, such as GPUs or TPUs, further enhances processing speed, which is particularly beneficial for interactive applications where quick responses are essential.

Track system metrics consistently to make sure performance stays on target; for real-time applications, a common goal is a response time in the 1–2 second range.

Percentile measurements such as the 95th or 99th percentile show how your RAG system handles its slowest responses, especially during peak demand. The 95th percentile is the latency that 95% of requests do not exceed, so it describes the experience of the slowest 5% of requests and points to potential performance bottlenecks.

Tracking these percentiles allows you to identify and tackle latency issues, fine-tune response times, and maintain steady system performance, even under heavy traffic. This ensures a smoother experience for users and boosts your system's dependability.

Prometheus and Grafana are two standout tools for keeping tabs on latency in retrieval-augmented generation (RAG) systems. Prometheus specializes in gathering and storing detailed performance metrics, while Grafana shines by offering highly customizable dashboards to visualize and analyze that data. When used together, they provide real-time insights into system performance, making it easier to pinpoint and address latency issues as they arise.

Both tools are open-source and incredibly versatile, which has made them popular choices for complex AI setups and large language model (LLM) deployments. They’re also scalable and budget-friendly, making them a smart option for organizations aiming to fine-tune latency monitoring in their RAG systems.
