Fine-tuning large language models is expensive and time-consuming. But Parameter-Efficient Fine-Tuning (PEFT) and Quantized Low-Rank Adaptation (QLoRA) are changing that. These methods make fine-tuning faster, less resource-intensive, and more accessible to businesses with limited budgets or hardware.

Quick Comparison:

| Aspect | PEFT | QLoRA |
|---|---|---|
| Memory Usage | Moderate, requires full model | Low, compresses base model |
| Hardware Needs | Moderate GPU memory | Consumer-grade GPUs |
| Training Speed | Faster for smaller models | Optimized for large models |
| Precision | Full precision | Minor precision trade-offs |
| Best For | Stable, multi-task fine-tuning | Cost-efficient, large-model use |

Choose PEFT for simplicity and stable performance. Opt for QLoRA if you need to fine-tune large models on limited hardware.

When it comes to overcoming the hurdles of traditional fine-tuning, PEFT and QLoRA step in as practical, resource-friendly alternatives. These methods focus on refining specific parts of a model, cutting down on the need for extensive resources, and opening the door to a wider range of applications. Here's a closer look at how each works and what they bring to the table.

Parameter-Efficient Fine-Tuning (PEFT) is all about efficiency. Instead of tweaking an entire large language model, PEFT zeroes in on updating a small subset of parameters. How? By adding compact, trainable adapters to the existing, fixed base model. This approach slashes memory usage and computational costs while still taking full advantage of the base model's capabilities.

For example, businesses partnering with Artech Digital can use PEFT to quickly roll out tailored AI solutions, whether it's a chatbot fine-tuned for a specific industry or a document analysis system designed for niche tasks.

One standout technique within the PEFT framework is Low-Rank Adaptation (LoRA). LoRA simplifies the process even further by expressing each weight update as the product of two much smaller low-rank matrices, which are the only parts that get trained. This allows the model to adapt to specific tasks without modifying its original weights. The result? Lower memory demands and faster training times.
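To make the savings concrete, here is a minimal sketch that counts trainable parameters for one weight matrix. The layer size (4096 x 4096) and the rank (8) are illustrative assumptions, not values taken from any particular model.

```python
def lora_param_counts(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Return (dense_update_params, lora_params) for a single weight matrix."""
    dense = d_out * d_in           # a full update has the same shape as the weight matrix
    lora = rank * (d_out + d_in)   # B is d_out x rank, A is rank x d_in
    return dense, lora


# Hypothetical layer size and rank, chosen only for illustration.
dense, lora = lora_param_counts(d_out=4096, d_in=4096, rank=8)
print(f"dense update: {dense:,} parameters")
print(f"LoRA update:  {lora:,} parameters ({100 * lora / dense:.2f}% of dense)")
```

Because the rank is small compared with the layer dimensions, the adapter holds well under 1% of the parameters a dense update would need for this layer.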

Quantized Low-Rank Adaptation (QLoRA) takes PEFT's efficiency a step further by introducing low-bit quantization into the mix. While PEFT focuses on reducing the number of parameters that need updating, QLoRA compresses the base model itself using techniques like 4-bit quantization. This process converts high-precision numbers into compact, lower-bit formats, dramatically shrinking the model's memory footprint.
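The idea of converting high-precision numbers into a 4-bit format can be sketched in plain Python. This is a simplified stand-in: it uses uniform absmax rounding to signed integers, whereas QLoRA itself uses a non-uniform 4-bit data type (NF4). The function names and sample weights are assumptions made up for this example.

```python
def quantize_absmax_4bit(block: list[float]) -> tuple[list[int], float]:
    """Map floats to integer codes in [-7, 7] plus one shared scale per block."""
    absmax = max(abs(x) for x in block) or 1.0  # fall back to 1.0 for an all-zero block
    scale = absmax / 7
    codes = [round(x / scale) for x in block]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


weights = [0.70, -0.35, 0.10, 0.00]  # made-up sample values
codes, scale = quantize_absmax_4bit(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, restored, max_error)
```

Each weight is now stored as a small integer, and the rounding error per value is bounded by half the scale. That bounded error is the precision trade-off that quantization introduces.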

But here's the clever part: QLoRA limits how much precision it gives up. The base weights stay frozen in 4-bit form and are converted back to a higher-precision format whenever they are used in a computation, while the newly added adapter parameters are kept in a higher-precision format during training. This hybrid strategy makes it possible to fine-tune even large models using high-performance consumer GPUs, sidestepping the need for expensive enterprise-grade hardware.

For businesses, QLoRA is a game-changer. It makes advanced model fine-tuning affordable and accessible, enabling companies to create custom AI tools without breaking the bank on infrastructure. Whether you're working with limited resources or aiming to maximize efficiency, QLoRA opens up exciting possibilities for specialized AI applications.

Building on the earlier overviews, let’s dive into a side-by-side comparison of PEFT and QLoRA across key performance areas. While both methods aim to reduce resource demands and enable efficient fine-tuning, they shine in different scenarios depending on specific needs and limitations.

In terms of memory usage, QLoRA has a clear edge because it stores the base model in 4-bit form. Standard PEFT, by contrast, trains only a small subset of parameters but still loads the entire base model at its original precision (typically 16- or 32-bit), which can become a bottleneck with very large models.
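A rough back-of-the-envelope estimate shows why the bit width of the base model matters. The sketch below counts memory for the weights alone; it ignores activations, gradients, optimizer state, and the small overhead of quantization constants. The 7-billion-parameter size is a hypothetical example.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


params = 7e9  # hypothetical 7-billion-parameter model
for label, bits in [("32-bit", 32), ("16-bit", 16), ("4-bit", 4)]:
    print(f"{label:>6}: {weight_memory_gb(params, bits):.1f} GB")
```

Going from 16-bit to 4-bit storage cuts the weight footprint to a quarter, which is often the difference between needing a data-center GPU and fitting on a consumer card.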

PEFT's approach of training only adapter layers keeps computational costs low. QLoRA adds quantization work on top of that: the 4-bit weights must be converted back to a higher-precision format whenever they are used, which can slightly increase the compute needed per training step. Beyond memory and computation, the two methods also differ in training speed and scalability.

Training speed depends heavily on the hardware setup and the model size. PEFT is particularly efficient for small- to medium-sized models because it updates only a limited number of parameters, streamlining the process. QLoRA, however, shines when working with larger models. Its reduced memory footprint enables these models to run on consumer-grade hardware, making it possible to use larger batch sizes and train more smoothly in memory-constrained setups.

When it comes to scalability, QLoRA’s ability to fine-tune large models on standard hardware levels the playing field. This makes advanced fine-tuning accessible to smaller teams or organizations, especially in deployment scenarios where compressed models are a necessity.

These technical distinctions translate into different business applications, depending on the goals and limitations of the user.

PEFT is well-suited for applications requiring consistent performance with moderate resource usage. Examples include customer service chatbots or document analysis systems, where stability and reliability are key. Its modular design also allows multiple task-specific fine-tuning efforts to share the same base model, making it a cost-effective option for organizations with diverse AI needs.

QLoRA, on the other hand, is ideal for startups, research teams, and smaller businesses operating with limited hardware. It’s particularly useful for prototype development, proof-of-concept projects, and edge AI applications, where deploying a compressed model is a significant advantage.

| Aspect | PEFT | QLoRA |
|---|---|---|
| Memory Reduction | Updates a limited set of adapter parameters | Compresses the base model for greater savings |
| Training Speed | Optimized for small-to-medium models | Benefits large models on limited hardware |
| Hardware Requirements | Requires moderate GPU memory | Runs on consumer-grade GPUs |
| Model Stability | Maintains full precision for the base model | May involve minor precision trade-offs |
| Deployment Size | Retains standard model format | Supports compressed deployment |
| Best For | Organizations with established infrastructure | Resource-constrained environments |
| Learning Curve | Straightforward | Requires understanding of quantization |

Ultimately, the choice between PEFT and QLoRA depends on your hardware setup and performance goals. For businesses with access to robust computational resources, PEFT offers a stable and straightforward path to fine-tuning. On the flip side, QLoRA is a game-changer for those with tighter hardware constraints or for projects requiring compact deployment, like mobile AI or edge computing.

For those working with Artech Digital, the decision will hinge on the specific use case and deployment needs. Real-time customer interaction systems might lean toward PEFT for its stability, while mobile or edge AI applications are likely to benefit from QLoRA’s compact and efficient design.

Once you've weighed the efficiencies of PEFT and QLoRA, the next step is putting these techniques into action using specialized libraries. Implementing these methods is straightforward thanks to tools that simplify the process, even for teams without extensive machine learning expertise. By leveraging these libraries, you can transition from theory to practice without a steep learning curve.

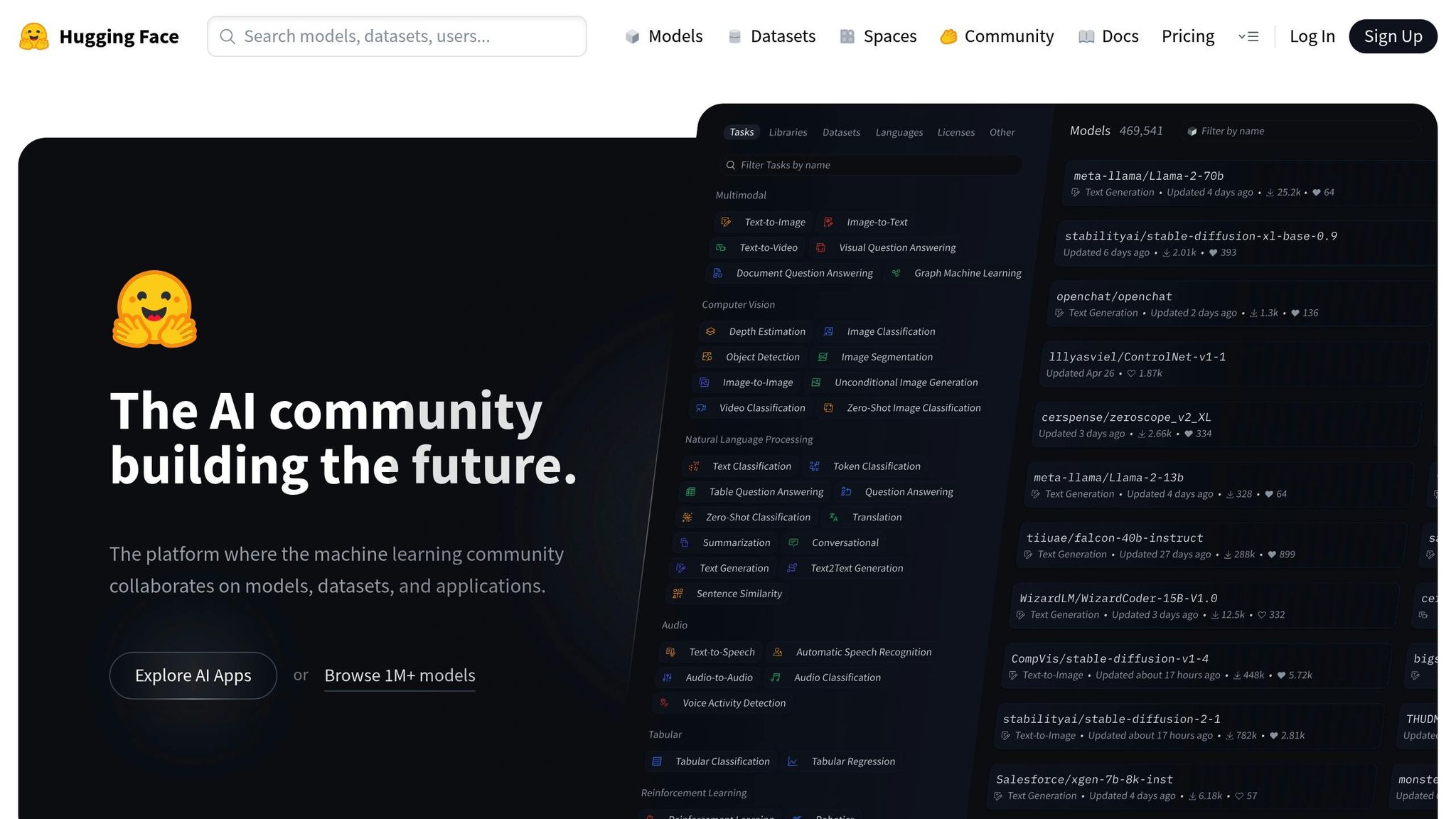

The Hugging Face PEFT library is a go-to solution for parameter-efficient fine-tuning. It integrates seamlessly with the broader Hugging Face ecosystem, making it particularly convenient for teams already working with transformers and related tools.

Getting started is simple. Install the PEFT library with pip, define your adapter configuration, wrap your base model, and you're ready to train - all with just a few lines of code. The library supports various adapter types, including LoRA, and ensures that only adapter parameters are updated during training. This keeps the process efficient while maintaining compatibility with frameworks like PyTorch Lightning and Accelerate.
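The steps above (install, configure, wrap, train) can be sketched as follows, assuming `pip install peft transformers` has been run. The model name `facebook/opt-125m`, the hyperparameter values, and the `target_modules` names are illustrative assumptions; other architectures use different layer names.

```python
# Hypothetical LoRA settings; tune them for your own model and task.
LORA_SETTINGS = {
    "r": 8,                                  # rank of the low-rank update
    "lora_alpha": 16,                        # scaling factor applied to the update
    "lora_dropout": 0.05,
    "target_modules": ["q_proj", "v_proj"],  # assumed attention layer names (OPT-style)
}


def build_lora_model(model_name: str = "facebook/opt-125m"):
    """Load a base model and wrap it so that only LoRA adapters are trainable."""
    # Imported lazily so the settings above can be inspected without the libraries.
    from transformers import AutoModelForCausalLM
    from peft import LoraConfig, TaskType, get_peft_model

    base_model = AutoModelForCausalLM.from_pretrained(model_name)
    config = LoraConfig(task_type=TaskType.CAUSAL_LM, **LORA_SETTINGS)
    peft_model = get_peft_model(base_model, config)
    peft_model.print_trainable_parameters()  # reports trainable vs. total parameters
    return peft_model
```

Calling `build_lora_model()` downloads the base model, so it needs network access on first use. The returned object can be passed to a training loop like any other model.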

A standout feature of the PEFT library is its ability to attach multiple adapters to a single base model. This means you can maintain a separate adapter for each task and switch between them without significant overhead. Additionally, the library handles details such as freezing the base weights so that only adapter parameters receive updates, making it user-friendly even for teams with limited machine learning experience.
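As a sketch of the multi-adapter pattern, the helpers below register one named adapter per task on an already wrapped model and then select which one is active. They assume `peft_model` is a `PeftModel` (for example, the result of `get_peft_model`) and `lora_config` is a `LoraConfig`; the helper names and task names are made up for illustration.

```python
def attach_task_adapters(peft_model, task_names, lora_config):
    """Register one named adapter per task on an existing PeftModel."""
    for name in task_names:
        peft_model.add_adapter(name, lora_config)
    return peft_model


def activate_adapter(peft_model, task_name):
    """Select which named adapter is used for the next training or inference call."""
    peft_model.set_adapter(task_name)
    return peft_model


# Example usage with hypothetical task names:
#   attach_task_adapters(peft_model, ["support_chat", "contract_review"], lora_config)
#   activate_adapter(peft_model, "contract_review")
```

All adapters share the same frozen base weights, so adding a task costs only the memory of one more small adapter.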

For organizations with existing infrastructure, the PEFT library integrates well with MLOps pipelines, distributed training setups, and model versioning systems. It also simplifies deployment with built-in tools for saving and loading adapters, making it easier to manage models in production.
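Saving and loading adapters might look like the sketch below. The directory path and base model name are placeholders you would supply; the helper names are hypothetical. Only the adapter weights and configuration are written to disk, so the base model must be available again at load time.

```python
def save_adapter(peft_model, adapter_dir: str) -> None:
    """Write the adapter weights and config (not the base model) to adapter_dir."""
    peft_model.save_pretrained(adapter_dir)


def load_adapter(base_model_name: str, adapter_dir: str):
    """Reload the base model and attach a previously saved adapter to it."""
    # Imported lazily so the helpers can be defined without the libraries installed.
    from transformers import AutoModelForCausalLM
    from peft import PeftModel

    base_model = AutoModelForCausalLM.from_pretrained(base_model_name)
    return PeftModel.from_pretrained(base_model, adapter_dir)
```

Because adapter files are small compared with the base model, they are convenient to version and ship separately.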

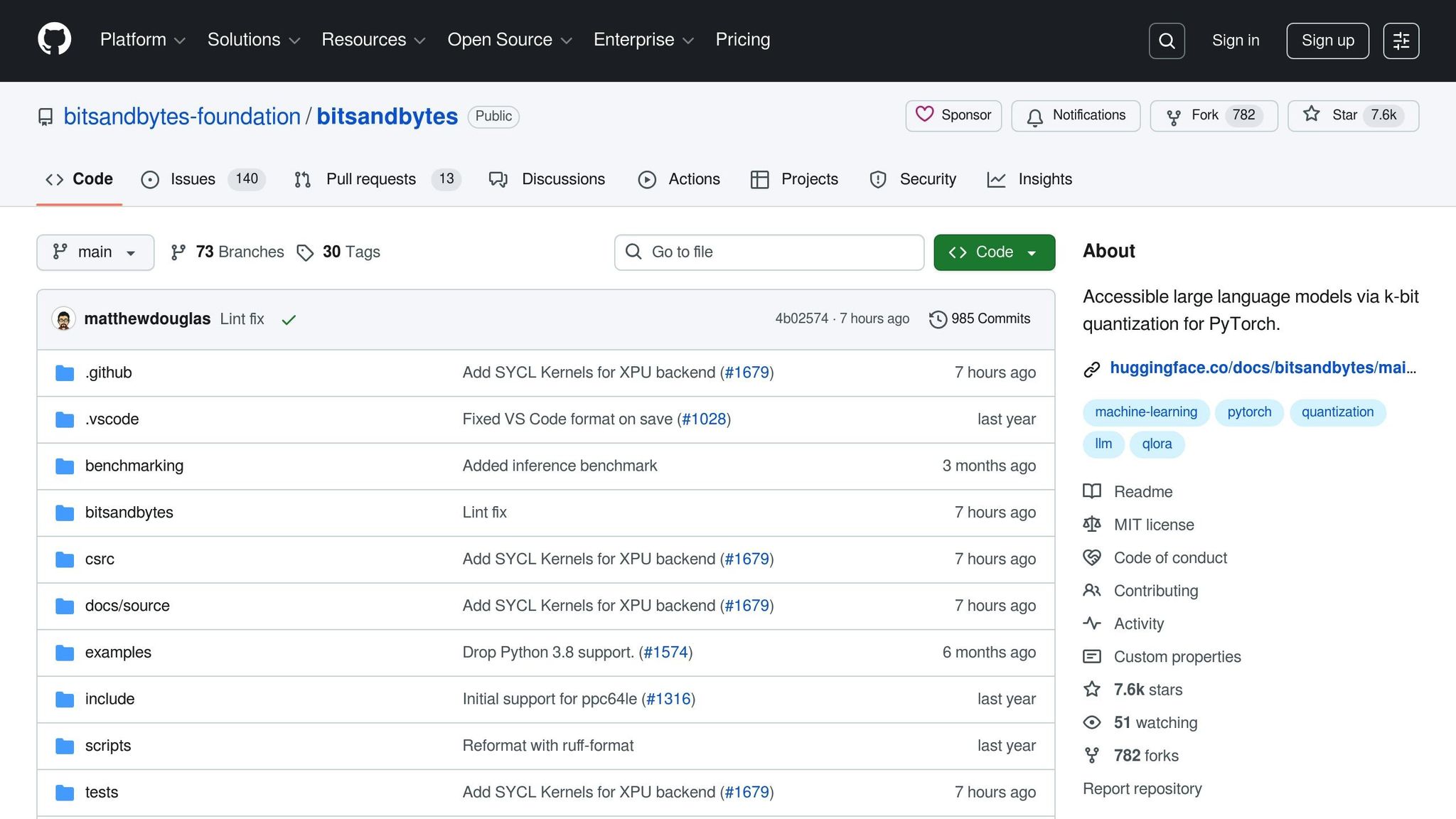

BitsAndBytes is the key to QLoRA's quantization process. This library specializes in reducing model precision from 16- or 32-bit down to 8-bit or 4-bit, dramatically cutting memory requirements while preserving most of the model's performance.

When you load a pre-trained model with quantization enabled, BitsAndBytes quantizes the weights as they are loaded, so the model occupies far less memory than its full-precision counterpart. This makes it possible to run large language models on standard consumer GPUs, a major advantage for teams with limited hardware resources.

By combining BitsAndBytes with the PEFT library, you can create a complete QLoRA workflow. BitsAndBytes handles the model compression, while PEFT manages the trainable adapter layers. Together, they deliver an efficient and flexible solution for fine-tuning large models.

BitsAndBytes doesn't stop at basic quantization. It includes advanced optimization techniques like custom CUDA kernels and mixed-precision training, which improve the performance of 4-bit operations. It also has fallback mechanisms for tasks that are difficult to handle with extreme quantization, making it robust for a wide range of scenarios.

To enable quantization, you simply configure parameters during model initialization - selecting the quantization type (usually 4-bit), specifying the compute data type, and setting memory optimization flags. The library takes care of the technical details, offering a straightforward setup for diverse use cases.
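In the Hugging Face ecosystem, those parameters are typically passed through `BitsAndBytesConfig` from `transformers`. The sketch below assumes `transformers`, `bitsandbytes`, `accelerate`, and `torch` are installed and a CUDA GPU is available; the specific values are common choices, not requirements.

```python
# Assumed settings for illustration; adjust to your hardware and model.
QUANT_SETTINGS = {
    "load_in_4bit": True,               # store base weights in 4-bit form
    "bnb_4bit_quant_type": "nf4",       # 4-bit data type ("nf4" or "fp4")
    "bnb_4bit_use_double_quant": True,  # also quantize the quantization constants
}


def make_4bit_config():
    """Build a 4-bit quantization config with bfloat16 as the compute data type."""
    import torch
    from transformers import BitsAndBytesConfig

    return BitsAndBytesConfig(bnb_4bit_compute_dtype=torch.bfloat16, **QUANT_SETTINGS)


def load_quantized_model(model_name: str):
    """Load a causal language model with its weights quantized at load time."""
    from transformers import AutoModelForCausalLM

    return AutoModelForCausalLM.from_pretrained(
        model_name,
        quantization_config=make_4bit_config(),
        device_map="auto",  # let accelerate place layers on the available devices
    )
```

The compute data type controls the precision used for the actual matrix multiplications after the 4-bit weights are dequantized.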

The real power of these tools comes from their seamless integration. A typical QLoRA workflow involves loading a model with BitsAndBytes for quantization, applying PEFT adapters for trainable parameters, and then proceeding with fine-tuning as usual. This combination balances the memory efficiency of quantization with the flexibility of parameter-efficient fine-tuning.
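Put together, the workflow described above could be sketched like this. The model name, LoRA hyperparameters, and `target_modules` names are assumptions for illustration, and the code expects `transformers`, `peft`, `bitsandbytes`, `accelerate`, and `torch` plus a CUDA GPU.

```python
def build_qlora_model(model_name: str = "facebook/opt-125m"):
    """Quantize the base model at load time, then attach trainable LoRA adapters."""
    import torch
    from transformers import AutoModelForCausalLM, BitsAndBytesConfig
    from peft import LoraConfig, get_peft_model, prepare_model_for_kbit_training

    # Step 1: load the base model with 4-bit weights.
    quant_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_compute_dtype=torch.bfloat16,
    )
    model = AutoModelForCausalLM.from_pretrained(
        model_name, quantization_config=quant_config, device_map="auto"
    )

    # Step 2: prepare the quantized model for training.
    model = prepare_model_for_kbit_training(model)

    # Step 3: add LoRA adapters; only these parameters will be trained.
    lora_config = LoraConfig(
        r=8,
        lora_alpha=16,
        lora_dropout=0.05,
        target_modules=["q_proj", "v_proj"],  # assumed layer names (OPT-style)
        task_type="CAUSAL_LM",
    )
    return get_peft_model(model, lora_config)
```

From here, the returned model can be handed to a standard training loop; the quantized base stays frozen while the adapters learn.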

Let’s break down the main differences and strengths of PEFT and QLoRA, two methods designed to make fine-tuning large language models more accessible and cost-effective.

Both PEFT and QLoRA aim to simplify fine-tuning while reducing costs, but they shine in different areas:

PEFT works well for quick iterations on smaller models, while QLoRA is better suited for handling larger models in memory-constrained scenarios. Both approaches reduce computational demands and training time, but the choice ultimately depends on the balance between resource availability and the need for precision.

The right method depends on your specific goals and limitations.

Your long-term AI strategy also plays a role. Businesses looking to scale across multiple use cases may benefit from PEFT's flexibility, while those focused on cost-effective deployment of large models should consider QLoRA’s resource-saving advantages.

For expert guidance, Artech Digital (https://artech-digital.com) offers services to help businesses fine-tune machine learning models and implement solutions like PEFT and QLoRA. Their expertise ensures you can navigate the complexities of both methods and select the one that aligns best with your needs.

When deciding, think about your team’s technical skills, available hardware, and budget. While PEFT’s simplicity can lower development costs, QLoRA is designed to cut ongoing expenses in large-scale deployments.

PEFT (Parameter-Efficient Fine-Tuning) and QLoRA each have their own strengths, particularly in terms of usability and required expertise. Within PEFT, plain LoRA stands out for its simplicity and speed. By introducing low-rank matrices to existing layers, it streamlines the fine-tuning process, making it an excellent option for those with a basic understanding of deep learning.

On the other hand, QLoRA shines when it comes to memory efficiency and handling longer sequences. However, it involves more advanced steps, such as quantization, which can be challenging. This makes it a better fit for users who are comfortable navigating GPU memory optimization and tweaking quantization settings.

In short, if ease and speed are your priorities, LoRA is the way to go. But if you need to fit a larger model into limited GPU memory and don't mind a more involved setup, QLoRA is worth the effort.

QLoRA's 4-bit quantization may lead to a minor drop in model accuracy due to reduced numerical precision. However, the degree of this impact largely hinges on the model's architecture and the complexity of the fine-tuning task.

Even with this trade-off, QLoRA remains a popular option. Why? It drastically cuts down computational demands while still delivering performance that's suitable for many applications. This makes it an appealing choice when faster fine-tuning and reduced hardware expenses are key priorities.

PEFT and QLoRA can work together to make fine-tuning more efficient. PEFT focuses on updating just a small portion of the model's parameters, while QLoRA adds quantization of the frozen base model to cut down on memory requirements. When combined, these methods allow fine-tuning that uses far fewer resources.

This combination is especially helpful when fine-tuning large language models on hardware with limited capacity. It strikes a balance by reducing memory usage and maintaining performance, making it possible to achieve excellent results without requiring high-end computational setups.
