AI systems are powerful, but their energy demands are skyrocketing. Training large models like GPT-3 consumes massive amounts of electricity, leading to high costs and environmental concerns. By 2028, U.S. data centers' energy use could triple, making energy efficiency a top priority for businesses. This guide covers how to cut costs and energy use without sacrificing AI performance, from model architecture choices to training practices and deployment optimization.

These strategies don’t require heavy investments and can lead to substantial savings while helping businesses meet stricter energy regulations.

Building energy-efficient AI systems starts with making smart architectural decisions. The structure and type of model you choose can significantly cut down computational demands while maintaining performance. These choices not only impact energy consumption but also influence training and deployment costs. Let's explore how these foundational decisions shape energy-efficient AI.

Task-specific models are inherently more energy-efficient than general-purpose ones. Instead of using a massive, all-encompassing model, tailoring your approach to the specific task can save substantial energy. For instance, sparse models can reduce computations by 5 to 10 times compared to dense models. Similarly, using a moderately complex Random Forest can strike a balance between accuracy and energy use, whereas overly complex models can escalate energy demands exponentially.

In many cases, a middle-ground approach offers the best results. For natural language processing tasks, opting for DistilBERT instead of BERT, or choosing GPT-3.5 Turbo over GPT-3 or GPT-4 Turbo over GPT-4, provides comparable outcomes with significantly lower energy consumption.

Next, we’ll dive into compression techniques that further enhance efficiency.

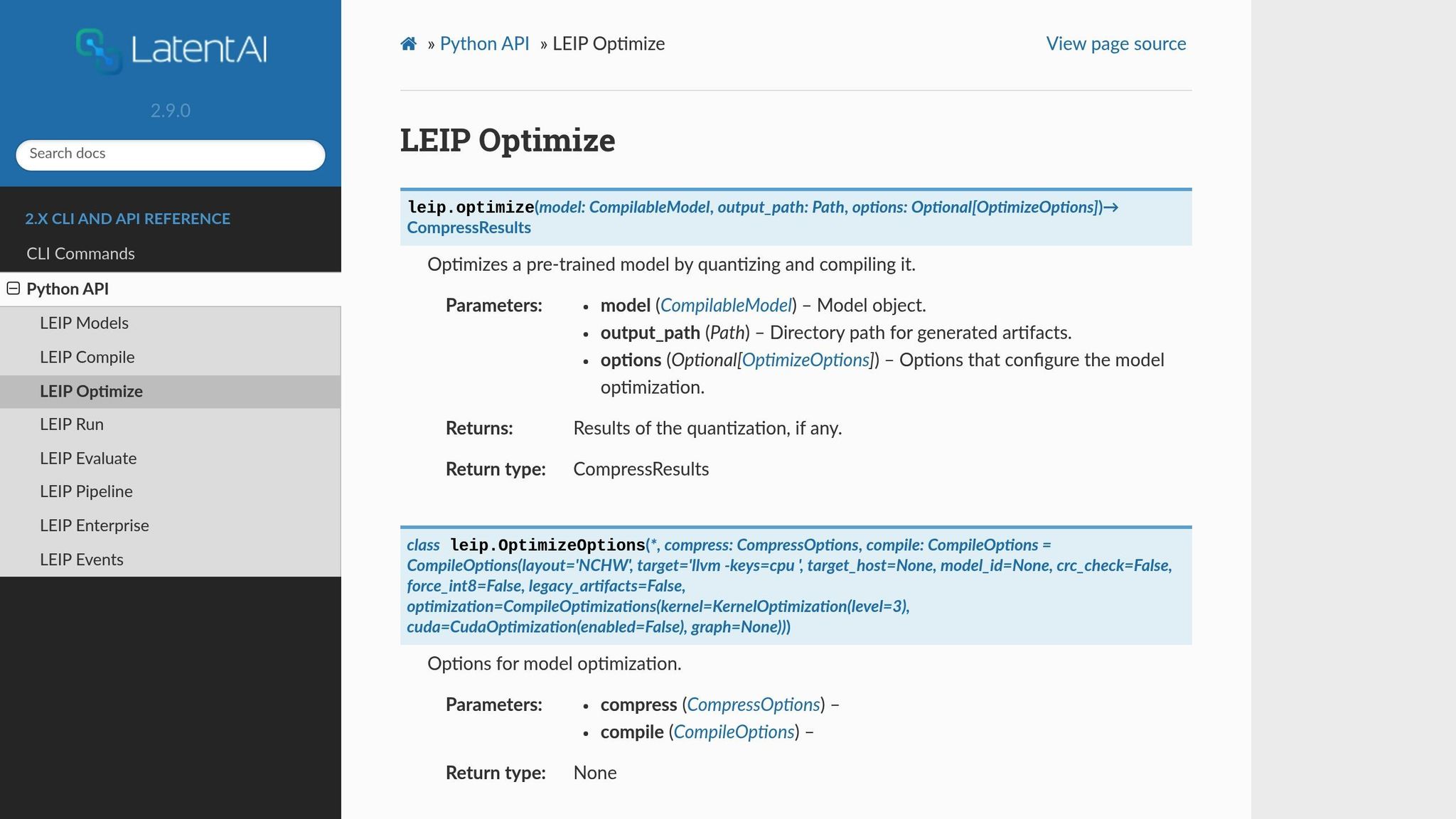

Model compression focuses on reducing a model's size and energy use without compromising its predictive power. One effective method is quantization, which converts model parameters from 32-bit floating-point (FP32) to 8-bit integer (INT8). This approach slashes memory requirements, as FP32 values take up 4 bytes, while INT8 uses just 1 byte.
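To make the FP32-to-INT8 idea concrete, here is a minimal sketch of symmetric, per-tensor quantization in plain Python. The function names (`quantize_int8`, `dequantize`) and the sample weights are illustrative assumptions, not part of any particular framework; production toolkits typically add calibration data and per-channel scales.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -1.27, 0.03, 0.5, -0.4]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = len(weights) * 4  # 4 bytes per FP32 value
int8_bytes = len(weights) * 1  # 1 byte per INT8 value
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, fp32_bytes, int8_bytes, max_error)
```

The rounding error per weight is bounded by half the scale, which is why quantization tends to cost little accuracy when the weight range is narrow.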

Pruning is another powerful technique. It removes unnecessary parameters that contribute little to performance. For example, the Optimal BERT Surgeon (oBERT) achieves a 10× reduction in model size with less than a 1% accuracy loss. This pruning method also speeds up CPU inference by 10× with under a 2% accuracy drop, and even by 29× with less than a 7.5% accuracy loss compared to the original dense BERT model.
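As a rough illustration of pruning, the sketch below uses simple magnitude pruning, which zeroes the weights with the smallest absolute values. This is a deliberately simpler technique than oBERT, which relies on second-order information; the function name and the sample weights are assumptions made for the example.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]


# Hypothetical weights, chosen only for illustration.
weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02, 0.3, -0.08, 0.6, 0.1]
sparse = magnitude_prune(weights, sparsity=0.5)
kept = sum(1 for w in sparse if w != 0.0)
print(sparse, kept)
```

In practice the zeroed weights only save energy when the runtime or hardware can skip them, and a short fine-tuning pass usually follows to recover accuracy.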

Knowledge distillation transfers the insights of larger, more complex models into smaller, more efficient ones. DistilBERT is a standout example, retaining 97% of BERT's performance while using 40% fewer parameters and providing 60% faster inference times. Quantization scales to very large models as well: OPTQ, a post-training quantization method, can compress GPT models with 175 billion parameters in about four GPU hours, reducing bitwidth to 3 or 4 bits per weight with minimal accuracy loss.
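The teacher-student idea behind knowledge distillation can be sketched as a loss that pushes the student's softened output distribution toward the teacher's. The version below is a common textbook formulation (temperature-scaled softmax with a KL divergence), not the exact DistilBERT training recipe; the logits and the temperature value are assumptions chosen for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2


# Hypothetical logits for a three-class problem.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]
far_student = [0.5, 4.0, 1.0]
print(distillation_loss(close_student, teacher))
print(distillation_loss(far_student, teacher))
```

A student whose outputs track the teacher's gets a loss near zero, while a student that disagrees is penalized more heavily. Real training setups usually combine this term with the ordinary loss on ground-truth labels.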

While post-training quantization (PTQ) is simpler to implement because it needs no retraining, quantization-aware training (QAT) tends to preserve accuracy better, at the cost of additional training compute.

Streamlined feature engineering can cut computational overhead by focusing on the most relevant data. Instead of processing excessive amounts of information, emphasizing fewer but more informative features can reduce energy use while maintaining high accuracy.

For example, studies have shown that by targeting key features, researchers achieved 99.46% of a top model's accuracy using only 15% of the original dataset's columns. This approach was particularly effective in predicting lifestyle indicators from wearable device data.

Strategies like prioritizing key features, generating derived variables, and using predictive imputation help minimize redundancy. Techniques such as binning (segmenting continuous data into ranges) can normalize noisy data and improve model performance. Additional methods, including feature splitting, handling outliers, and applying log transformations, can also reduce computational load while keeping models accurate and interpretable.
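Two of the techniques mentioned above, binning and log transformation, can be sketched in a few lines. The step counts and the bin edges below are hypothetical values picked for the example; real cut points would come from domain knowledge or the data distribution.

```python
import math


def bin_value(value, edges):
    """Return the index of the range that `value` falls into.

    `edges` are ascending upper bounds; values above the last edge
    go into a final overflow bin.
    """
    for i, edge in enumerate(edges):
        if value <= edge:
            return i
    return len(edges)


def log_transform(value):
    """Compress a skewed, non-negative value with log(1 + x)."""
    return math.log1p(value)


# Hypothetical wearable readings: daily step counts.
steps = [1200, 4800, 7600, 15000, 32000]
edges = [2500, 7500, 12500]  # assumed cut points, not taken from any study

binned = [bin_value(s, edges) for s in steps]
logged = [log_transform(s) for s in steps]
print(binned)
print(logged)
```

Binning replaces a noisy continuous column with a handful of categories, and the log transform narrows the gap between typical values and outliers, both of which can let a smaller model do the same job.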

The architectural decisions you make today will define your AI system's energy footprint for years to come. By selecting task-specific models, leveraging compression techniques, and refining feature engineering, you can create systems that are both energy-efficient and cost-effective.

Training AI models demands a lot of energy, but refining the process can significantly cut costs. Beyond optimizing model architectures, focusing on how training is conducted can further enhance energy efficiency. Adjustments to training parameters, leveraging pre-trained models, and thoughtful scheduling of computational tasks can all reduce your energy expenses and environmental impact. Even small tweaks can make a big difference, complementing the architectural and deployment strategies mentioned earlier.

Fine-tuning training parameters is one of the quickest ways to save energy. Adjustments like modifying batch size or learning rate can reduce computational demands while maintaining a balance between energy consumption and training speed.

"Existing work primarily focuses on optimizing deep learning training for faster completion, often without considering the impact on energy efficiency. We discovered that the energy we're pouring into GPUs is giving diminishing returns, which allows us to reduce energy consumption significantly, with relatively little slowdown." - Jae-Won Chung, doctoral student in computer science and engineering

A great example of this in action is the Zeus optimization framework from the University of Michigan. Zeus dynamically adjusts GPU power limits and batch sizes during training, achieving up to a 75% reduction in energy consumption for deep learning models - all without requiring new hardware. The impact on training time is minimal. Another effective technique is early stopping, which halts training once validation improvements plateau. This prevents unnecessary GPU usage, a critical point since GPUs account for about 70% of the power used in training deep learning models. Across the industry, such parameter adjustments could reduce AI training’s carbon footprint by as much as 75%.
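Early stopping is simple enough to sketch directly. The helper below is a minimal, framework-independent version: it takes a list of per-epoch validation losses (simulated here) and reports where training would halt. The `patience` and `min_delta` parameters are conventional names, and the loss values are made up for illustration.

```python
def train_with_early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_loss) given simulated per-epoch validation losses.

    Training stops once `patience` consecutive epochs fail to improve
    the best loss by more than `min_delta`.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best
    return len(val_losses) - 1, best


# Hypothetical validation losses that plateau around 0.50.
losses = [0.90, 0.70, 0.55, 0.50, 0.51, 0.50, 0.52, 0.49, 0.48]
stopped_at, best_loss = train_with_early_stopping(losses, patience=3)
print(stopped_at, best_loss)
```

With these numbers the run halts at epoch index 6 and skips the last two epochs. The trade-off is visible too: a later small improvement (0.48) is missed, which is why `patience` is worth tuning rather than setting as low as possible.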

The next step in improving efficiency is using pre-trained models.

Transfer learning is a game-changer when it comes to reducing energy consumption. Instead of building a model from scratch, fine-tuning pre-trained models for specific tasks can cut training time from months to just weeks.

"Transfer learning isn't just about efficiency; it's a testament to the shared knowledge within the AI community. By building upon existing models, we advance the field collectively." - Dr. Amanda Rodriguez

This approach not only speeds up development but also reduces the computational load. By 2025, experts estimate that transfer learning could lower energy waste by up to 20% while improving renewable energy forecasting accuracy by 35%. When fine-tuning pre-trained models, starting with a lower learning rate helps retain previously learned features, reducing computational intensity without sacrificing quality. This is especially useful when working with smaller datasets, as it maximizes the use of existing information without requiring extensive retraining.

Once internal processes are optimized, scheduling strategies can provide even more savings.

Strategically timing training sessions can lower costs and reduce strain on the power grid. Running training tasks during off-peak hours takes advantage of lower electricity rates in the United States.

Microsoft’s Project Forge offers a compelling example. By virtually scheduling workloads during periods of available hardware capacity, the project has achieved utilization rates of 80–90% at scale. Additionally, scheduling training during times of high renewable energy generation - like daytime solar peaks in sunny regions - can further reduce the carbon intensity of computations and lower energy costs. Off-peak scheduling also allows data centers to benefit from utility pricing incentives and demand response programs, making this a win-win for both energy efficiency and cost savings.
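Off-peak scheduling can be approximated with a small helper that decides when a training job should start. This is a sketch under stated assumptions: the off-peak window of 22:00 to 06:00 is hypothetical (actual hours depend on your utility and tariff), the window is assumed to wrap past midnight, and the function name is invented for the example.

```python
from datetime import datetime


def next_off_peak_start(now, start_hour=22, end_hour=6):
    """Return `now` if it is already off-peak, else today's window start.

    Assumes the window wraps past midnight (start_hour > end_hour),
    for example 22:00 to 06:00.
    """
    in_window = now.hour >= start_hour or now.hour < end_hour
    if in_window:
        return now
    return now.replace(hour=start_hour, minute=0, second=0, microsecond=0)


# Fixed timestamp used for a reproducible example.
now = datetime(2025, 1, 15, 14, 30)
start = next_off_peak_start(now)
delay = start - now
print(start, delay)
```

A job launcher could sleep for `delay` before starting training, or pass `start` to whatever scheduler you already use. Replacing the fixed window with real-time price or carbon-intensity data would be the natural next step.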

Efficiently deploying a trained AI model is all about reducing energy usage. This can be achieved through techniques like model compression, adaptive scaling, and runtime optimization.

Compression techniques - such as pruning, quantization, and knowledge distillation - help shrink model size and cut energy consumption, making them perfect for resource-constrained environments like mobile and edge devices.

"Model compression is a crucial step in deploying AI efficiently on real-world devices." - Dinushan Sriskandaraja

Pruning removes unnecessary connections in neural networks, often reducing model size by 50% without sacrificing accuracy. For instance, ResNet models maintain their performance while significantly lowering computational demands. In some cases, pruning can eliminate up to 90% of parameters while still delivering competitive accuracy.

Quantization lowers the precision of weights and activations, speeding up inference and reducing memory usage. This approach is particularly effective for mobile and edge AI applications where hardware resources are limited.

Knowledge distillation creates smaller, faster "student" models by transferring knowledge from larger "teacher" models. For example, DistilBERT achieves nearly the same accuracy as BERT while using 40% fewer parameters. Similarly, TinyBERT is 7.5 times smaller and 9.4 times faster than BERT, yet retains 96.8% of its accuracy.

The best compression method depends on your needs. Quantization and knowledge distillation are ideal for mobile and edge AI, while pruning and low-rank factorization work well for large-scale systems. Combining techniques often delivers the most energy-efficient results.

Cloud environments provide a unique opportunity to align energy use with real-time demand. Instead of running systems at full capacity around the clock, adaptive scaling adjusts resources dynamically. A great example of this is Google's DeepMind AI, which reduced energy use for cooling its data centers by 40% in 2016 by learning from real-time temperature and workload data.

Dynamic resource allocation prevents energy waste from over-provisioning. By analyzing historical data and current workloads, AI systems can predict future energy needs and allocate resources accordingly. This is increasingly critical as data centers already consume about 1% of global electricity, with IT sector consumption expected to rise to 13% by 2030.
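The idea of predicting demand from historical data and allocating resources to match can be sketched with a moving-average forecast. Everything here is an illustrative assumption: the request rates, the per-replica capacity, the 20% headroom, and the function names. Production autoscalers use richer forecasting models and live metrics.

```python
import math


def forecast_next(history, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def replicas_needed(predicted_rps, capacity_per_replica, min_replicas=1, headroom=0.2):
    """Size the fleet for the predicted load plus a safety margin."""
    target = predicted_rps * (1 + headroom)
    return max(min_replicas, math.ceil(target / capacity_per_replica))


# Hypothetical requests-per-second measurements from recent intervals.
requests_per_second = [120, 150, 180, 210, 240]
predicted = forecast_next(requests_per_second)  # mean of 180, 210, 240
replicas = replicas_needed(predicted, capacity_per_replica=50)
print(predicted, replicas)
```

Sizing to the forecast rather than to the worst case is what avoids over-provisioning: when traffic drops, the same function returns fewer replicas, down to `min_replicas`.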

Virtualization further enhances efficiency by consolidating workloads onto fewer physical servers. Combined with energy-aware scheduling algorithms, this approach cuts down the number of machines running continuously, lowering costs while maintaining performance.

After deployment, runtime optimization ensures models continue operating efficiently. This step focuses on speeding up inference and reducing energy use without requiring retraining.

Graph optimization techniques like layer fusion, constant folding, and pruning streamline computations and improve memory usage during inference. For example, Meta's LLaMA-3.2-1B model originally required about 4GB of memory and took 52.41 seconds to generate a response. After optimization, response time dropped to 8 seconds, and the quantized version delivered results in just 1 second.
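Layer fusion is easiest to see in a small case. One common form, assumed here since the text does not say which layers are fused, folds a batch normalization step into the linear layer before it, so inference runs one operation instead of two. The weights and statistics below are hypothetical.

```python
import math


def linear(x, weights, biases):
    """Apply a dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch normalization with fixed statistics."""
    return [g * (v - m) / math.sqrt(s + eps) + bt
            for v, g, bt, m, s in zip(y, gamma, beta, mean, var)]


def fuse_linear_bn(weights, biases, gamma, beta, mean, var, eps=1e-5):
    """Fold batch-norm parameters into the linear layer's weights and biases."""
    fused_w, fused_b = [], []
    for row, b, g, bt, m, s in zip(weights, biases, gamma, beta, mean, var):
        scale = g / math.sqrt(s + eps)
        fused_w.append([w * scale for w in row])
        fused_b.append((b - m) * scale + bt)
    return fused_w, fused_b


# Hypothetical layer parameters and input.
weights = [[0.5, -1.0], [2.0, 0.25]]
biases = [0.1, -0.3]
gamma, beta = [1.5, 0.8], [0.0, 0.2]
mean, var = [0.4, -0.1], [0.25, 1.0]
x = [1.0, 2.0]

unfused = batch_norm(linear(x, weights, biases), gamma, beta, mean, var)
fused_w, fused_b = fuse_linear_bn(weights, biases, gamma, beta, mean, var)
fused = linear(x, fused_w, fused_b)
print(unfused, fused)
```

The two outputs agree up to floating-point rounding, but the fused version reads and writes the intermediate activations once fewer, which is where the memory and energy savings come from at scale.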

"Optimization and quantization are two essential techniques for making machine learning models faster, lighter, and easier to use in real-world applications." - Eddy Ejembi

Dynamic Voltage and Frequency Scaling (DVFS) adjusts GPU clocks to balance performance and energy use, improving efficiency by up to 30% without altering the model itself.

Runtime model distillation can shrink models by up to 90%, cutting energy consumption during inference by 50–60%. Other advanced algorithms can reduce energy use by 30.6% with only a slight 0.7% dip in accuracy.

Even the choice of model architecture impacts runtime efficiency. For instance, LLaMA-3.2-1B consumes 77% less energy than Mistral-7B, while GPT-Neo-2.7B uses more than twice the energy of some higher-performing models.

Choosing the right optimization technique means finding the right balance between energy efficiency, accuracy, and implementation complexity. Below, we break down several methods and their trade-offs, followed by a handy comparison table to help you decide which one fits your needs.

Quantization significantly reduces model size - by as much as 75–80% - with less than a 2% dip in accuracy, making it a go-to for edge devices and mobile apps. One major bank saw a 73% drop in model inference time by combining quantization with pruning.

Pruning trims 30–50% of model parameters while maintaining performance, though it requires careful tuning to avoid accuracy issues.

Knowledge Distillation compresses models to retain 90–95% of the original (teacher) model’s performance. However, this technique adds complexity since it involves training both teacher and student models.

Ensemble Methods can also be energy-efficient when kept compact. For example, an ensemble of 2 models uses 37.49% less energy than an ensemble of 3, and an ensemble of 3 uses 26.96% less than an ensemble of 4.
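A compact ensemble with majority voting can be sketched as follows. The three "models" are hypothetical rule-based stand-ins invented for this example; in a real system they would be trained classifiers such as decision trees or Naive Bayes.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the most common label; ties go to the label seen first."""
    return Counter(predictions).most_common(1)[0][0]


def ensemble_predict(models, sample):
    """Collect one prediction per model and combine them by majority vote."""
    return majority_vote([model(sample) for model in models])


# Hypothetical stand-ins for three small trained classifiers.
def by_length(text):
    return "spam" if len(text) > 40 else "ham"


def by_keyword(text):
    return "spam" if "free" in text.lower() else "ham"


def by_exclaim(text):
    return "spam" if text.count("!") >= 2 else "ham"


models = [by_length, by_keyword, by_exclaim]
print(ensemble_predict(models, "Free prize!! Click now"))
print(ensemble_predict(models, "See you at lunch"))
```

Each extra member adds one more inference per prediction, so energy use grows roughly with ensemble size, which is the reason for keeping the ensemble at two or three models.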

"From a Green AI perspective, we recommend designing ensembles of small size (2 or maximum 3 models), using subset-based training, majority voting, and energy-efficient ML algorithms like decision trees, Naive Bayes, or KNN." - Rafiullah Omar, Justus Bogner, Henry Muccini, Patricia Lago, Silverio Martínez-Fernández, Xavier Franch

Feature Engineering can reduce the number of features by 34.36%, improving model performance while cutting energy use. In fact, key metrics showed a ~9% improvement after applying these techniques. When paired with hardware-level optimizations, the energy savings can be even greater.

Combining methods often yields the best results. For instance, applying quantization after pruning can shrink models by 4–5× and speed them up by 2–3×. An e-commerce platform demonstrated this by cutting computing resource use by 40% in its recommendation engines.

| Technique | Energy Savings | Accuracy Impact | Complexity | Use Cases |
|---|---|---|---|---|
| Quantization | 75–80% reduction in model size | Minimal loss (<2%) | Moderate | Edge devices, mobile apps, bandwidth-limited deployments |
| Model Pruning | 30–50% parameter reduction; 63% faster inference | May require fine-tuning | Moderate to High | Resource-constrained devices, strict size requirements |
| Knowledge Distillation | Achieves 90–95% of teacher's performance | Maintains accuracy | Moderate to High | High-accuracy applications with compact models |
| Ensemble Methods | Size-2 saves 37.49% energy vs. size-3; size-3 saves 26.96% vs. size-4 | Improved via majority voting | Moderate | Balancing accuracy with energy efficiency |
| Feature Engineering | 34.36% reduction in features | Key metrics improved (~9%) | Moderate | Data-heavy applications, preprocessing optimization |

The best technique for your project depends on your specific goals. Quantization is perfect for mobile apps with strict size constraints, while knowledge distillation suits cases where accuracy is non-negotiable. For quicker wins, feature selection or small ensemble methods are excellent starting points.

To measure success, benchmark energy efficiency improvements using inference time, memory usage, and FLOPS.
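A minimal benchmarking harness for two of these metrics, inference time and memory usage, might look like the sketch below. It uses only the standard library, and the `dense_inference` function is a hypothetical stand-in for a real model call. Note that `tracemalloc` only tracks Python-level allocations, so GPU memory and FLOPS would need framework-specific profilers.

```python
import time
import tracemalloc


def benchmark(fn, *args, repeats=5):
    """Return (best wall-clock seconds, peak traced bytes) for calling fn(*args)."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        timings.append(time.perf_counter() - start)

    # Measure memory in a separate run so tracing overhead does not skew timings.
    tracemalloc.start()
    fn(*args)
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return min(timings), peak


def dense_inference(weights, x):
    """Hypothetical stand-in for a model forward pass: a single dot product."""
    return sum(w * xi for w, xi in zip(weights, x))


weights = [0.001 * i for i in range(50_000)]
x = [1.0] * 50_000
seconds, peak_bytes = benchmark(dense_inference, weights, x)
print(f"best time: {seconds:.6f} s, peak memory: {peak_bytes} bytes")
```

Running the same harness before and after an optimization, on the same hardware and inputs, gives a like-for-like comparison. Taking the best of several runs reduces noise from other processes.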

The path to energy-efficient AI isn't just about cutting costs - it's about building scalable systems that deliver value over time. As we've explored, applying the right optimization methods can bring down energy usage significantly without compromising performance. But making these strategies work often requires careful planning and, more importantly, specialized expertise.

Having the right expertise can make all the difference. Take Google's 2014 collaboration with DeepMind, for instance. By applying machine learning to real-time data from its data centers - tracking factors like temperature, humidity, and energy use - they managed to cut cooling energy consumption by 40%. This wasn't just a win for their bottom line; it also had a measurable environmental impact.

AI also has the potential to transform energy use in industries like power plants and light manufacturing. But unlocking these benefits isn’t simple. Challenges like regulatory hurdles, limited data access, interoperability issues, skills shortages, and resistance to change often stand in the way. This is where expert guidance becomes essential, helping businesses navigate these obstacles and achieve meaningful results.

The numbers speak for themselves - projects driven by skilled professionals consistently demonstrate the long-term benefits of energy-efficient AI. The key lies in balancing cost savings with environmental responsibility while maintaining strong performance. For companies aiming to meet sustainability targets, this is no small task. They must tackle the growing energy demands of AI while contributing to broader efforts to make the technology more energy-conscious.

One effective strategy is locating data centers near renewable energy sources. Another is sharing tools that enable others to adopt lower-energy AI models. Both approaches illustrate how thoughtful planning can align environmental goals with practical business needs.

Artech Digital is a leader in helping businesses achieve this balance. Their services combine AI-powered tools - like custom AI agents, advanced chatbots, and machine learning models - with energy optimization strategies. By focusing on locally developed, Industry 4.0-compatible systems and integrating IoT technologies, they enable companies to meet both performance and energy efficiency goals.

It's important to view energy optimization as an ongoing effort rather than a one-time fix. AI models evolve, and so do business demands. Continuous monitoring and adjustments are essential to maintaining the benefits over time. Companies that invest in expert-led integration - whether through trusted partners or in-house teams - can enjoy immediate savings while setting the stage for future growth.

For specific applications, smaller, energy-efficient models often strike the right balance between affordability, performance, and energy use. The optimization techniques we've discussed offer practical ways to achieve this equilibrium.

Optimizing AI models to use less energy while keeping performance intact often relies on three main techniques: quantization, pruning, and knowledge distillation.

These strategies not only help save on energy costs but also make AI models more practical for use in resource-limited settings, like mobile devices or edge computing systems.

Scheduling AI training during off-peak hours can be a smart way to cut energy expenses. Electricity rates tend to drop during periods of lower demand, and leveraging these times not only saves money but also reduces stress on the power grid - a win for both your budget and the environment.

To make this approach work, begin by studying local energy demand trends to pinpoint off-peak hours. Automation tools are your best friend here; they can handle scheduling training jobs during these windows with little need for manual tweaking. Taking it a step further, integrating real-time energy data into your scheduling system can fine-tune the process, helping you achieve even greater savings and efficiency.

Reusing pre-trained models through transfer learning is a smart way to cut down on energy consumption in AI systems. Instead of building and training models from the ground up, this method takes advantage of models already trained on large datasets, saving both time and computational power.

Using pre-trained models for new tasks eliminates the heavy energy costs of training on massive datasets. This makes AI development not only faster but also more resource-efficient. It's particularly useful for tasks like image recognition, natural language processing, and other domains where these models perform exceptionally well.
