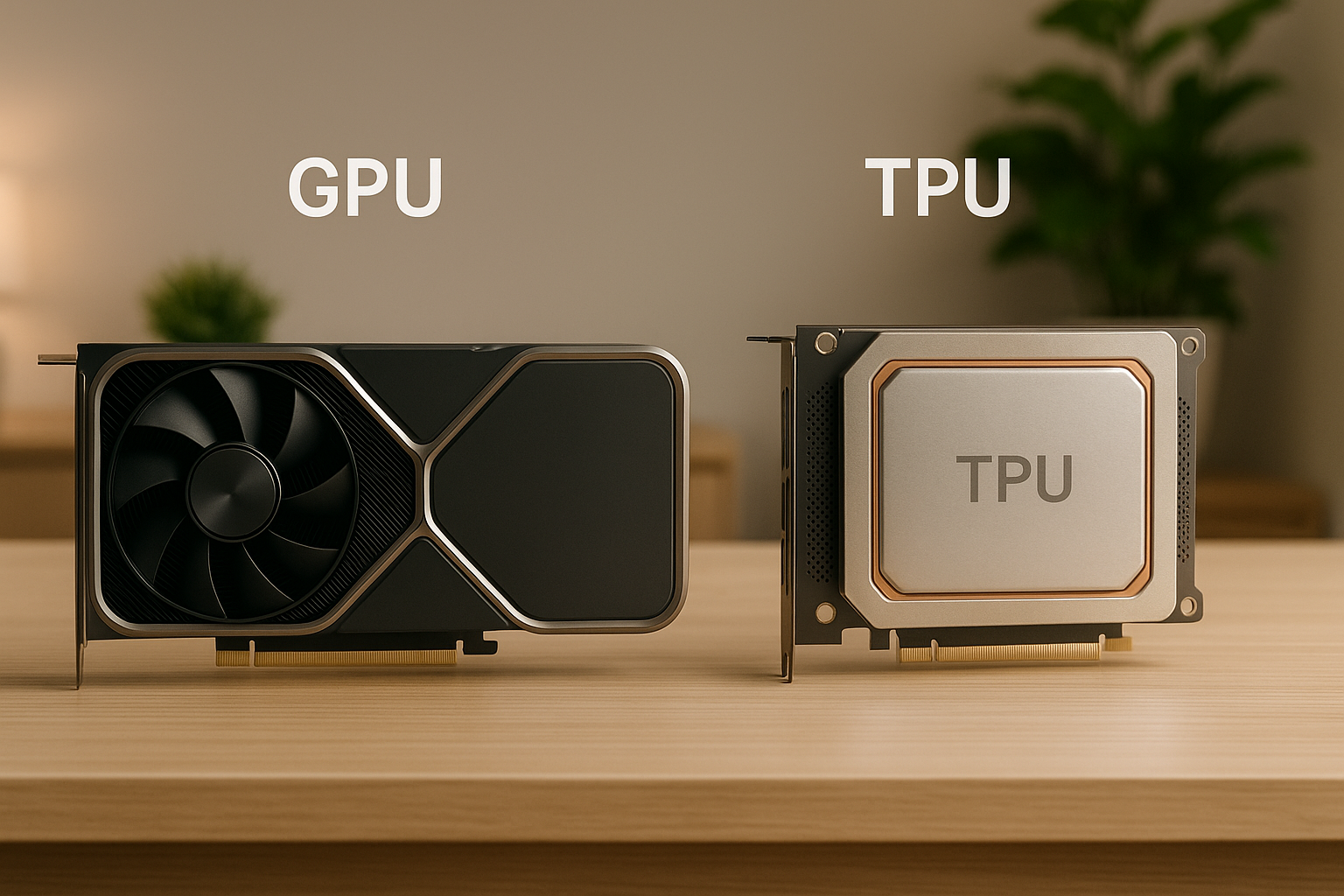

When deciding between GPUs and TPUs for AI workloads, energy efficiency is a key factor. GPUs are versatile, handling diverse tasks like training, inference, and real-time applications. However, they consume more power: high-end models draw 300–700W, and future models are expected to reach 800–1,000W. TPUs, designed specifically for machine learning, are more energy-efficient, consuming 175–250W per chip, and excel in large-scale training and inference tasks.

| Criteria | GPU | TPU |
|---|---|---|
| Power Usage | 300–1,000W | 175–250W |
| Performance-per-Watt | Moderate | High (2–3× GPU efficiency) |
| Precision Modes | FP32, BF16, INT8 | BF16, INT8 |
| Cooling Needs | High (air/liquid systems) | Lower (liquid standard) |
| Best For | Flexibility, real-time apps | Large-scale AI tasks |

Choosing the right hardware depends on your workload's size, precision needs, and budget for energy and infrastructure.

When evaluating AI hardware, one crucial factor to consider is how much computational power is delivered per watt of energy consumed. This metric is essential for understanding both operational costs and the broader environmental impact of these technologies.

Performance-per-watt is a standout metric for gauging the efficiency of AI hardware. TPUs often lead here, with reported advantages over GPUs of up to 2–3× on workloads that suit them. For example, TPU v4 delivers up to 1.7× better performance per watt than the NVIDIA A100 and a 2.7× improvement over TPU v3. Similarly, TPU v7 (Ironwood) offers double the efficiency of its predecessor and is nearly 30× more efficient than the first-generation Cloud TPU.
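To make the metric concrete, here is a minimal Python sketch of how performance per watt and a relative efficiency ratio could be computed from your own benchmark runs. The throughput and power numbers are hypothetical placeholders chosen for illustration, not measurements of any real chip.

```python
def perf_per_watt(throughput: float, avg_power_watts: float) -> float:
    """Return work completed per watt (for example, samples/s per W)."""
    if avg_power_watts <= 0:
        raise ValueError("avg_power_watts must be positive")
    return throughput / avg_power_watts


def relative_efficiency(candidate: float, baseline: float) -> float:
    """Return how many times more efficient the candidate is than the baseline."""
    return candidate / baseline


# Hypothetical benchmark results, for illustration only.
accel_a = perf_per_watt(throughput=3000.0, avg_power_watts=400.0)
accel_b = perf_per_watt(throughput=2550.0, avg_power_watts=200.0)

print(f"B is {relative_efficiency(accel_b, accel_a):.2f}x more efficient than A")
```

With these placeholder inputs the script prints `B is 1.70x more efficient than A`. The useful part is the method: measure throughput and average power on the same workload, then compare the ratios rather than the raw wattage.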

The choice of precision mode also plays a significant role in energy consumption. Precision modes like FP32 prioritize accuracy but require higher power, while BF16 strikes a balance between energy use and accuracy. INT8, on the other hand, minimizes power consumption but sacrifices some precision. Selecting the right precision mode for your workload can significantly optimize energy usage, paving the way for both cost savings and efficient computation.
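One contributor to these differences is simply data size: a lower-precision value takes fewer bytes to store and move. The sketch below estimates weight memory for a model at each precision. It is a back-of-the-envelope illustration only; the one-billion-parameter model is an assumed example, and the calculation ignores activations, optimizer state, and any actual power measurement.

```python
# Storage size of a single value in each precision mode.
BYTES_PER_VALUE = {"FP32": 4, "BF16": 2, "INT8": 1}


def weight_memory_gb(num_parameters: int, precision: str) -> float:
    """Approximate memory needed for model weights, in gigabytes (10^9 bytes)."""
    return num_parameters * BYTES_PER_VALUE[precision] / 1e9


# Assumed example: a model with one billion parameters.
for precision in BYTES_PER_VALUE:
    size = weight_memory_gb(1_000_000_000, precision)
    print(f"{precision}: {size:.1f} GB of weights")
```

Halving the bytes per value halves the data that has to travel between memory and compute units, which is one reason benchmarking at several precision levels is worthwhile.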

Energy consumption and associated costs are just as important as raw performance. Energy use is typically measured in kWh for smaller deployments and MWh for larger setups. High-end GPUs draw 300–400W during operation, with some reaching 700W, and future models are expected to hit 800–1,000W. In contrast, TPU v4 chips use only 175–250W, averaging around 200W.

For businesses in the U.S., these differences can lead to substantial savings. With electricity costs ranging from $0.08 to $0.20 per kWh, a 400W GPU running continuously could cost about $280–$700 annually, whereas a 200W TPU v4 would cost roughly half that amount. Beyond power savings, TPU v4 setups are estimated to reduce overall costs by 20–30% compared to similar GPU deployments, thanks to lower power consumption, reduced cooling needs, and lower maintenance expenses. Additionally, TPU v4 systems running in Google Cloud are reported to produce about 20× less CO₂e than comparable accelerators in typical on-premise data centers.
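The arithmetic behind the annual cost figures above can be reproduced with a short Python sketch. It assumes the device runs at its stated power draw around the clock and counts only the chip's own electricity; cooling and other facility overhead are deliberately left out. The function names are illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours of continuous operation


def annual_energy_kwh(power_watts: float, utilization: float = 1.0) -> float:
    """Energy used in a year, in kWh, at the given average utilization."""
    return power_watts / 1000.0 * HOURS_PER_YEAR * utilization


def annual_cost_usd(power_watts: float, usd_per_kwh: float,
                    utilization: float = 1.0) -> float:
    """Electricity cost for a year of operation, in US dollars."""
    return annual_energy_kwh(power_watts, utilization) * usd_per_kwh


# Power draws and electricity prices taken from the paragraph above.
for label, watts in [("400W GPU", 400), ("200W TPU v4", 200)]:
    low = annual_cost_usd(watts, 0.08)
    high = annual_cost_usd(watts, 0.20)
    print(f"{label}: ${low:,.2f} to ${high:,.2f} per year")
```

A 400W device uses about 3,504 kWh per year, which is where the roughly $280–$700 range comes from. Lowering the `utilization` argument shows how much of that cost disappears when hardware is not running continuously.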

Cooling systems are another critical factor in energy efficiency. High-performance GPUs generate significant heat due to their higher power demands, requiring advanced cooling solutions like air, liquid, or hybrid systems. On the other hand, TPUs are designed with energy efficiency in mind and often include liquid cooling as a standard feature. This not only lowers cooling requirements but also reduces related costs.

In cloud computing environments, TPUs demonstrate 30–40% better energy efficiency than GPUs when accounting for the entire system infrastructure.

Modern GPUs are reshaping energy-efficient AI performance by combining advanced architecture with smart configurations. Models like NVIDIA's H100 and A100 series showcase how far GPU energy efficiency has come, offering high performance while keeping energy waste to a minimum.

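Several strategies help get the most out of a GPU while keeping energy use in check, including running in lower-precision modes, capping power draw, tuning batch sizes, and sharing GPU resources to reduce idle time. These methods not only save energy but also highlight how GPUs can be fine-tuned for a variety of AI workloads.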

GPUs shine in scenarios where flexibility and performance are key. They can handle a mix of tasks like neural network training, inference, computer vision, and traditional computing, all on the same hardware. This adaptability allows for energy-efficient setups across diverse workloads.

For research and development, GPUs are ideal when high-precision calculations (e.g., FP64 or FP32) are necessary, such as in scientific computing or experimental AI models.

Another major advantage is their compatibility with popular AI frameworks like PyTorch, TensorFlow, and JAX, all of which run on NVIDIA's CUDA platform. This broad support can save development time, especially for teams juggling multiple AI projects with different requirements.

In real-time applications, GPUs deliver low-latency performance, making them a go-to choice for tasks like autonomous vehicle systems, real-time video processing, or interactive AI tools.

Cost-conscious deployments also benefit from GPUs. Their availability and competitive pricing in cloud environments, combined with a mature ecosystem, help lower training costs and make operational expenses more predictable.

Finally, GPUs are a natural fit for hybrid AI workloads that combine traditional machine learning with deep learning. This versatility allows organizations to streamline their compute infrastructure, reducing the need for multiple specialized hardware setups.

Google's Tensor Processing Units (TPUs) are purpose-built for energy-efficient AI computing. Unlike GPUs, which originated from graphics processing, TPUs are specifically designed for machine learning tasks. This targeted design can result in significant energy savings for certain AI workloads. Here's a closer look at how the TPU architecture supports its energy-efficient performance.

TPUs achieve their energy efficiency through a unique architecture that incorporates systolic arrays. These arrays are highly effective at optimizing matrix multiplications, a key operation in neural networks, while reducing energy loss caused by data movement. Additionally, TPUs utilize precision modes like BF16 and INT8, which consume less energy compared to higher-precision operations.

Another key feature is the inclusion of high-bandwidth on-chip memory. This design keeps frequently used data close to the processing units, cutting down on the energy required to access external memory. TPUs also benefit from a predictable execution model, which simplifies power management compared to the more dynamic scheduling needs of GPUs.

To handle large-scale AI training, TPUs often use a pod architecture. These pods connect multiple TPU chips via high-speed interconnects, allowing the system to scale efficiently while maintaining energy-conscious performance. The custom interconnects are designed to reduce communication overhead, ensuring smooth data flow.

This scalable approach is particularly effective for training massive language models. It enables computations to be distributed across numerous processing units, enhancing both speed and energy efficiency. Additionally, preemptible instances allow workloads to run during periods of low grid demand, which, when paired with renewable energy sources, can further lower costs and reduce environmental impact.

TPUs shine in scenarios that take full advantage of their specialized design. They are particularly effective for large-batch training, where their systolic arrays can handle significant amounts of data simultaneously. This makes them ideal for tasks such as training transformer models or other architectures requiring high throughput.

Tasks like natural language processing and predictable inference workloads also benefit from TPUs, especially when using frameworks like TensorFlow or JAX, which are optimized for TPU performance. However, TPUs may not be the best choice for workloads involving frequent model updates or mixed precision operations. Their design is most effective when models and precision settings remain stable, making them a strong option for research and large-scale training projects where energy efficiency is a key consideration. This makes TPUs a compelling alternative to GPU-based solutions in specific use cases.

Choosing between GPUs and TPUs for energy-efficient AI workloads requires a clear understanding of how each performs under different conditions. These processors shine in distinct scenarios, and their energy usage varies depending on factors like workload type, precision settings, and deployment scale. Here's a closer look at how they compare.

GPUs often operate in full-precision (FP32), which can draw significant power. However, they also support mixed or lower-precision modes like FP16, which help improve energy efficiency. TPUs, on the other hand, are designed to run efficiently in lower-precision modes like BF16, maintaining steady energy consumption even as batch sizes grow. GPUs offer developers more control to adjust precision levels, allowing for a balance between computational accuracy and energy savings.

These precision differences can have a noticeable impact on energy consumption. For instance, workloads running in full precision on GPUs may use substantially more power than those on TPUs optimized for lower-precision tasks.

GPUs scale through multi-device configurations connected by interconnects such as NVLink or PCIe. However, when used in large clusters, communication overhead can reduce their energy efficiency. TPUs are built with large-scale deployments in mind, offering energy-conscious performance that's well-suited for extensive AI workloads.

In terms of deployment flexibility, GPUs are versatile. They can be used in smaller setups, even a single card, making them accessible for a variety of computational needs. TPUs, while highly efficient for large-scale tasks, are typically deployed in pod-based setups, which are better suited for organizations handling massive AI projects.

Infrastructure is another critical factor in energy efficiency. GPU systems often require additional cooling and robust electrical setups, which can increase both cost and environmental impact. In contrast, TPUs have a more consistent power draw, simplifying facility planning and reducing energy-related expenses.

Ultimately, the decision between GPUs and TPUs depends on the specific workload requirements and a thorough evaluation of the total cost of ownership. This includes not just the hardware but also the associated infrastructure and operational energy costs.

When US businesses evaluate GPUs and TPUs, the decision goes beyond just the hardware price tag. Factors like energy consumption, cooling needs, and operational costs all play a role in determining the overall expense. A thorough analysis of these elements is essential for understanding the total cost of ownership.

The financial picture isn't complete without accounting for every associated cost. While high-end GPUs typically require a hefty upfront investment, TPUs are often offered through cloud-based services with usage-based pricing. This shifts the expense from a large initial outlay to ongoing operational costs.

But hardware is only part of the equation. Power, cooling, and infrastructure expenses can add up quickly, especially for GPU systems that demand robust cooling and power setups. On the other hand, cloud-based TPUs eliminate many of these upfront infrastructure costs. However, businesses must carefully monitor pay-as-you-go pricing for continuous workloads to avoid unexpected expenses.

Operational costs over time can heavily influence the total investment. Balancing these costs with long-term goals is key to making strategic decisions about AI hardware.
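One way to frame the upfront-versus-usage trade-off is a break-even estimate: how many hours of use it takes before owned hardware becomes cheaper than pay-as-you-go capacity. The Python sketch below is a simplified model under stated assumptions. All prices, the cooling overhead factor, and the function names are hypothetical, and it ignores maintenance, depreciation, and staffing.

```python
from typing import Optional


def owned_hourly_cost(power_watts: float, usd_per_kwh: float,
                      cooling_overhead: float = 0.0) -> float:
    """Hourly electricity cost of owned hardware.

    cooling_overhead is extra energy as a fraction of the chip's draw
    (0.3 means cooling adds 30% on top).
    """
    return power_watts / 1000.0 * (1.0 + cooling_overhead) * usd_per_kwh


def break_even_hours(upfront_usd: float, owned_hourly_usd: float,
                     cloud_hourly_usd: float) -> Optional[float]:
    """Hours of use after which owning is cheaper, or None if it never is."""
    saving_per_hour = cloud_hourly_usd - owned_hourly_usd
    if saving_per_hour <= 0:
        return None
    return upfront_usd / saving_per_hour


# Hypothetical inputs, for illustration only.
hourly = owned_hourly_cost(power_watts=700, usd_per_kwh=0.12, cooling_overhead=0.3)
hours = break_even_hours(upfront_usd=25_000, owned_hourly_usd=hourly,
                         cloud_hourly_usd=3.00)
print(f"Owned hardware costs ${hourly:.4f}/hour to power")
print(f"Break-even after about {hours:,.0f} hours of use")
```

If your expected usage is well below the break-even point, usage-based pricing is likely the cheaper route; continuous workloads tend to cross it sooner.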

AI workloads come with varying energy demands. Training large language models, for instance, is energy-intensive. TPUs often handle such tasks more efficiently, making them an attractive option for energy-conscious businesses.

Fine-tuning models, while less demanding than full training, still highlights the energy efficiency gap between GPUs and TPUs. This difference becomes even more pronounced when operations are scaled across multiple projects or run continuously.

Inference tasks, which are often performed 24/7, place a premium on energy efficiency. TPUs generally achieve comparable performance with lower power use, offering potential long-term savings for businesses running constant inference services.

For batch processing workloads, which fall between training and inference in energy demands, the total energy impact depends on factors like workload frequency and data size. In many cases, TPUs can provide an edge in efficiency.

The environmental impact of AI hardware choices is another critical consideration. Carbon emissions and sustainability are influenced by the type of hardware and the carbon intensity of the electricity used. For instance, GPU-heavy operations in regions with carbon-intensive energy sources can lead to a larger environmental footprint. Additionally, the cooling requirements for GPU systems can further increase energy consumption.

In contrast, TPUs - especially those deployed through optimized cloud services - can help reduce carbon emissions. For businesses prioritizing environmental responsibility, these distinctions are significant. The manufacturing footprint of GPUs versus the shared infrastructure model of TPUs also factors into the long-term environmental cost.
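The relationship between hardware power, cooling, and grid carbon intensity can be sketched as a simple product. In the Python example below, the carbon-intensity values and the cooling overhead are hypothetical placeholders; substitute the figures published for your own region and facility.

```python
HOURS_PER_YEAR = 24 * 365


def annual_emissions_kg(power_watts: float, grid_kg_co2_per_kwh: float,
                        cooling_overhead: float = 0.0) -> float:
    """Estimated yearly CO2 emissions in kg for one continuously running device.

    cooling_overhead is extra energy as a fraction of the device's own draw.
    """
    energy_kwh = power_watts / 1000.0 * HOURS_PER_YEAR * (1.0 + cooling_overhead)
    return energy_kwh * grid_kg_co2_per_kwh


# Hypothetical grid intensities (kg CO2 per kWh), for illustration only.
grids = {"low-carbon grid": 0.05, "carbon-intensive grid": 0.70}

for grid_name, intensity in grids.items():
    gpu = annual_emissions_kg(400, intensity, cooling_overhead=0.3)
    tpu = annual_emissions_kg(200, intensity, cooling_overhead=0.1)
    print(f"{grid_name}: 400W device {gpu:,.0f} kg, 200W device {tpu:,.0f} kg")
```

The example shows why location matters as much as hardware: the same device can have a very different footprint depending on the electricity that feeds it.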

Selecting the best hardware for energy-efficient AI workloads calls for a thoughtful and systematic approach. It’s not just about raw performance - you need to weigh computational power, energy consumption, and cost-effectiveness to match your specific needs.

Start by evaluating the key characteristics of your AI workload. Model size plays a major role. Large language models with billions of parameters often thrive on TPU architectures, while smaller models (under 1 billion parameters) tend to run efficiently on modern GPUs. Be sure to assess factors like memory requirements and throughput as well.

Next, consider precision requirements, as they can significantly impact energy efficiency. Precision modes like BF16 or INT8 offer a balance between accuracy and energy savings. Benchmark your model’s performance at both lower and higher precision levels to determine what works best.

Communication overhead is another factor to analyze. Workloads with heavy inter-node communication often favor TPU pods, while single-node inference tasks or those requiring frequent CPU-GPU exchanges may perform better on GPUs.

Deployment flexibility is also key. If you need rapid deployment or frequent model updates, GPUs provide greater adaptability. On the other hand, for steady, production-level workloads that run continuously, TPUs tend to deliver better energy efficiency over time. These factors lay the groundwork for deciding between GPUs and TPUs.

Once you’ve assessed your workload, use the insights to guide your hardware choice. For training workloads, especially those involving transformer architectures or attention mechanisms, TPUs often provide better energy efficiency. This includes tasks like training large language models, machine translation, and other extensive natural language processing tasks.

For inference workloads, the considerations shift. High-throughput batch inference generally leans toward TPUs, while low-latency, real-time inference tends to favor GPUs. Evaluate your expected queries per second and acceptable latency thresholds to make an informed decision, always keeping energy efficiency in mind.
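A simple way to combine queries per second, latency thresholds, and energy is to compute joules per query for each candidate setup and keep only those that meet your latency target. The Python sketch below does this with hypothetical profiles; the names and numbers are made up for illustration and should be replaced with your own benchmark results.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class InferenceProfile:
    name: str
    avg_power_watts: float
    queries_per_second: float
    p99_latency_ms: float

    @property
    def joules_per_query(self) -> float:
        # Watts are joules per second, so W / QPS gives joules per query.
        return self.avg_power_watts / self.queries_per_second


def most_efficient(profiles: List[InferenceProfile],
                   max_latency_ms: float) -> Optional[InferenceProfile]:
    """Pick the lowest-energy profile that still meets the latency threshold."""
    eligible = [p for p in profiles if p.p99_latency_ms <= max_latency_ms]
    if not eligible:
        return None
    return min(eligible, key=lambda p: p.joules_per_query)


# Hypothetical measurements, for illustration only.
profiles = [
    InferenceProfile("gpu-small-batch", 300.0, 600.0, 20.0),
    InferenceProfile("tpu-large-batch", 200.0, 1000.0, 120.0),
]

for limit_ms in (50.0, 200.0):
    choice = most_efficient(profiles, limit_ms)
    print(f"Latency limit {limit_ms:.0f} ms -> {choice.name if choice else 'none'}")
```

With a tight 50 ms limit only the small-batch profile qualifies, while a relaxed 200 ms limit lets the higher-throughput, lower-energy profile win.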

Mixed workloads - those involving both training and inference - can be tricky. GPUs are often more versatile for these scenarios. However, if you can separate the tasks, using TPUs for training and GPUs for inference can help optimize energy usage across the pipeline. Additionally, for large-scale workloads, TPU pods excel, whereas GPU clusters allow for more granular scaling.
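The rules of thumb in this section can be written down as a small decision helper. The Python sketch below is only a starting heuristic that mirrors the guidance in this article; the `Workload` fields, the one-billion-parameter cutoff as a hard threshold, and the order of the checks are simplifying assumptions, and real decisions should rest on benchmarks.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    kind: str                       # "training" or "inference"
    parameters: int                 # model size in parameters
    low_latency: bool = False       # real-time responses required
    frequent_updates: bool = False  # model changes or redeploys often


def suggest_accelerator(workload: Workload) -> str:
    """Return "GPU" or "TPU" as a first guess, following the article's guidance."""
    if workload.frequent_updates:
        return "GPU"  # flexibility matters more than peak efficiency
    if workload.kind == "inference":
        # Real-time inference favors GPUs; high-throughput batch favors TPUs.
        return "GPU" if workload.low_latency else "TPU"
    if workload.kind == "training":
        # Large models tend to suit TPUs; smaller ones run well on GPUs.
        return "TPU" if workload.parameters >= 1_000_000_000 else "GPU"
    raise ValueError(f"unknown workload kind: {workload.kind!r}")


examples = [
    Workload("training", 7_000_000_000),
    Workload("training", 300_000_000),
    Workload("inference", 7_000_000_000, low_latency=True),
    Workload("inference", 7_000_000_000),
]
for example in examples:
    print(example.kind, example.parameters, "->", suggest_accelerator(example))
```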

Once you’ve chosen the right hardware, focus on optimizing its usage to get the most out of your energy budget. For GPUs, tools like NVIDIA’s MPS can help share resources and reduce idle time, which improves overall energy utilization.

Batch size optimization is another critical factor. Larger batch sizes typically enhance throughput per watt, though they may also increase memory usage and latency. Experiment with different batch sizes to find the most efficient balance. For TPUs, batch sizes that are multiples of 128 often yield the best results.
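Both ideas in this paragraph are easy to sketch in Python: rounding a batch size up to a multiple of 128, and choosing the batch size with the best measured throughput per watt. The measurements in the example are hypothetical placeholders, and the helper names are illustrative.

```python
from typing import Dict, Tuple


def round_up_to_multiple(batch_size: int, multiple: int = 128) -> int:
    """Round batch_size up to the nearest multiple (128 by default)."""
    if batch_size <= 0 or multiple <= 0:
        raise ValueError("batch_size and multiple must be positive")
    return ((batch_size + multiple - 1) // multiple) * multiple


def best_batch_size(measurements: Dict[int, Tuple[float, float]]) -> int:
    """Return the batch size with the highest throughput per watt.

    measurements maps batch size -> (samples_per_second, avg_power_watts).
    """
    return max(measurements,
               key=lambda size: measurements[size][0] / measurements[size][1])


# Hypothetical benchmark results, for illustration only.
measured = {
    128: (1000.0, 180.0),
    256: (1900.0, 200.0),
    512: (2100.0, 230.0),
}

print(round_up_to_multiple(200))
print(best_batch_size(measured))
```

In this made-up data the largest batch is not the most efficient one, which is why measuring across several sizes is worth the effort. Latency and memory limits still need to be checked separately.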

Take advantage of power capping features on modern GPUs to set limits on maximum power consumption. This allows you to balance performance with your energy and cooling constraints, rather than running at full power unnecessarily.
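On NVIDIA GPUs, a power cap is commonly set with `nvidia-smi -pl <watts>`. The Python sketch below builds that command and, by default, only returns it without running anything. Treat it as an illustration under assumptions: the 300W value is an arbitrary example, the valid range depends on the specific GPU, and actually applying a limit usually requires administrator privileges.

```python
import shutil
import subprocess
from typing import List


def build_power_cap_command(gpu_index: int, limit_watts: int) -> List[str]:
    """Build the nvidia-smi command that sets a power limit on one GPU."""
    if limit_watts <= 0:
        raise ValueError("limit_watts must be positive")
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(limit_watts)]


def apply_power_cap(gpu_index: int, limit_watts: int,
                    dry_run: bool = True) -> List[str]:
    """Apply the cap, or just return the command when dry_run is True.

    The command is also skipped if nvidia-smi is not installed.
    """
    command = build_power_cap_command(gpu_index, limit_watts)
    if dry_run or shutil.which("nvidia-smi") is None:
        return command
    subprocess.run(command, check=True)
    return command


# Dry run: show the command that would cap GPU 0 at an example 300W.
print(" ".join(apply_power_cap(gpu_index=0, limit_watts=300)))
```

Pair a cap with a throughput benchmark before and after: a modest reduction in the limit often costs less performance than it saves in power, but the trade-off varies by workload.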

Schedule non-urgent tasks during off-peak hours to lower operational costs and reduce environmental impact. This approach can help you save on energy expenses while maintaining efficiency.

Finally, monitor and measure your energy consumption consistently. Tools like nvidia-smi for GPUs or cloud-based monitoring services for TPUs can track power usage patterns. Establish baseline measurements and regularly check whether your hardware setup meets your energy efficiency goals.
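For GPUs, a baseline can be built by sampling `nvidia-smi --query-gpu=power.draw --format=csv,noheader,nounits` at a fixed interval and converting the samples to energy. The Python sketch below parses that output and sums energy in watt-hours. The sample output string and the 15-minute interval are illustrative assumptions, and the function names are not part of any NVIDIA tooling.

```python
import shutil
import subprocess
from typing import List

QUERY_COMMAND = ["nvidia-smi", "--query-gpu=power.draw",
                 "--format=csv,noheader,nounits"]


def parse_power_draw(output: str) -> List[float]:
    """Parse nvidia-smi output with one power reading (in watts) per line."""
    readings = []
    for line in output.strip().splitlines():
        try:
            readings.append(float(line.strip()))
        except ValueError:
            continue  # skip lines without a numeric reading, such as "[N/A]"
    return readings


def read_power_draw() -> List[float]:
    """Query current power draw per GPU; empty list if nvidia-smi is missing."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY_COMMAND, capture_output=True,
                            text=True, check=True)
    return parse_power_draw(result.stdout)


def energy_wh(samples_watts: List[float], interval_seconds: float) -> float:
    """Approximate energy in watt-hours from evenly spaced power samples."""
    return sum(samples_watts) * interval_seconds / 3600.0


# Illustrative sample output for a two-GPU machine.
sample_output = "285.43\n301.10\n"
print(parse_power_draw(sample_output))

# Four readings of 300W taken 15 minutes apart cover one hour.
print(energy_wh([300.0, 300.0, 300.0, 300.0], interval_seconds=900))
```

Logging these readings alongside throughput gives the baseline needed to tell whether a later change, such as a power cap or a different batch size, actually improved efficiency.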

For more complex AI pipelines, consider hybrid approaches. For example, you could use TPUs for energy-intensive training phases and switch to GPUs for inference or fine-tuning tasks that require greater flexibility. This strategy helps strike a balance between energy efficiency and operational needs across your entire workflow.

Deciding between GPUs and TPUs for energy-efficient AI setups isn't just about picking hardware - it's about making informed choices that align with your goals. Artech Digital specializes in helping US businesses navigate these decisions to achieve top-notch performance while keeping energy use in check. Here's how their services can make a difference in optimizing energy-efficient AI deployments.

Artech Digital's AI & Data Systems services tackle the challenges of energy-efficient AI hardware head-on. Their solutions include creating custom AI agents, implementing RAG (retrieval-augmented generation) systems, and fine-tuning large language models (LLMs) to fit the specific needs of US companies.

When building custom AI agents, the team dives deep into hardware requirements, always keeping energy consumption in mind. Whether you're automating customer service, analyzing data, or generating content, they assess if the flexibility of GPUs or the efficiency of TPUs is the better fit. This analysis takes into account both upfront costs and long-term operational savings.

For tasks like fine-tuning LLMs or developing private models, Artech Digital ensures the hardware setup is just right. They consider model size, how often it needs training, and precision requirements - all while prioritizing energy efficiency. For example, massive language models with billions of parameters often run more efficiently on TPUs, while smaller, frequently updated models might perform better on GPUs. Balancing computational power, energy use, and data security is key to ensuring these systems meet performance goals and compliance standards.

Artech Digital doesn't stop at integration - they provide ongoing support to ensure your AI systems remain energy-efficient throughout their lifecycle. Their Launch & Scale process works seamlessly with major cloud platforms like Azure and AWS, focusing on smart resource allocation to minimize energy use.

Their approach to optimization delivers long-term value. Through Team Augmentation, they provide experienced AI and FullStack engineers who collaborate with your team to implement energy-conscious strategies effectively.

For companies seeking strategic guidance, their Fractional CTO Services offer executive-level expertise in designing energy-efficient AI systems. This service is especially useful for mid-sized businesses that need high-level technical direction without hiring a full-time CTO.

Artech Digital also excels in hybrid deployment strategies. They design systems where TPUs handle energy-intensive training tasks, while GPUs manage inference tasks that require more flexibility. This balanced setup ensures energy efficiency across your entire AI pipeline, from training to deployment.

Deciding between GPUs and TPUs for energy-efficient AI workloads comes down to understanding the specific needs of your tasks. The data paints a clear picture of when each option shines.

TPUs lead the pack in energy efficiency for AI-focused tasks. They deliver 2–3 times better performance per watt compared to GPUs. Google's Ironwood TPU pushes this even further, achieving nearly 30 times the power efficiency of the first Cloud TPU introduced in 2018. As Amin Vahdat, VP/GM of ML, Systems & Cloud AI at Google, puts it:

"Ironwood perf/watt is 2x relative to Trillium, our sixth-generation TPU announced last year. At a time when available power is one of the constraints for delivering AI capabilities, we deliver significantly more capacity per watt for customer workloads."

Power consumption differences are striking. TPU v4 units typically consume between 175 and 250 watts, while high-end GPUs typically draw 300–400 watts, with some reaching 700 watts. This lower power usage for TPUs translates into meaningful operational cost savings.

For U.S. businesses, environmental considerations are increasingly important. With rising demands for sustainable AI infrastructure, TPUs can help reduce carbon emissions by up to 40% compared to GPU-based systems. This is a critical factor when training large-scale models, which can consume hundreds of megawatt-hours of electricity.

Ultimately, your workload determines the best choice. TPUs excel in scenarios like AI inference, training large language models, and tensor-heavy operations where energy efficiency is key. On the other hand, GPUs are better suited for tasks requiring flexibility, frequent updates, or non-AI workloads.

Keep an eye on hardware advancements, as they can rapidly shift the landscape. For example, Google's sixth-generation TPU (Trillium) is reported to improve energy efficiency by about 2.5 times compared to TPU v4, and Ironwood doubles performance per watt again. Regularly benchmarking your workloads and using cloud vendor pricing tools can help you make informed decisions.

In an era where energy efficiency is more critical than ever, choosing the right hardware isn't just a technical decision - it’s a strategic one. Businesses that prioritize power-efficient solutions today will be better positioned to meet future AI demands and comply with evolving standards for sustainable computing.

Cooling systems significantly impact the energy efficiency and operating costs of GPUs and TPUs. One technology gaining traction is liquid cooling systems. These systems are excellent at managing heat, cutting down energy usage, and enabling higher performance densities. In fact, they can lower server energy consumption by anywhere from 4% to 15%, which translates into noticeable savings on operating costs.

When it comes to energy efficiency, TPUs stand out. They typically consume between 175 and 250 watts, which is considerably less than high-end GPUs that draw between 300 and 400 watts. This reduced power usage means TPUs generate less heat, easing cooling requirements. For data centers aiming to optimize energy use for AI workloads, TPUs offer a more cost-effective solution. By reducing cooling demands, they help lower energy expenses and contribute to a smaller environmental impact.

When choosing between GPUs and TPUs for energy-efficient AI workloads, you need to weigh the task requirements and hardware capabilities carefully. TPUs are built with energy efficiency in mind, excelling at tasks like neural network training and inference, especially in large-scale AI projects. They deliver more performance for every watt of power, making them a strong choice for specialized AI operations.

Meanwhile, GPUs are more versatile, handling a broader range of tasks beyond AI. However, they tend to use more power during demanding AI-specific tasks. To make the right decision, consider the scale of your project, the complexity of your AI tasks, and your energy consumption priorities.

To strike the right balance between computational power and energy efficiency, businesses need to choose hardware that matches their specific AI workload. TPUs are a great option for tasks that require less energy per computation, making them ideal for large-scale projects where conserving energy is a priority. Meanwhile, GPUs can be fine-tuned with techniques like using lower precision and activating energy-saving modes, which help improve their performance relative to power usage.

Additional approaches include using energy-conscious hardware, taking advantage of accelerated computing, and employing effective thermal management systems. By aligning hardware capabilities with the demands of their workloads, businesses can maximize performance while keeping energy consumption in check.
